Jonathan Zittrain requests your comments on his article, "The Generative Internet." It was first published by The Harvard Law Review, and he's now expanding it into a book. Zittrain is Professor of Internet Governance and Regulation, Oxford University, and Jack N. & Lillian R.

Berkman Visiting Professor of Entrepreneurial Legal Studies at Harvard Law School.

I will let him explain his request in his own words: I've written before on the Microsoft antitrust case and on SCO (see http://papers.ssrn.com/sol3/papers.cfm?abstract_id=174110 and http://papers.ssrn.com/sol3/papers.cfm?abstract_id=529862 respectively). I've just finished a new paper on the future of the Net, in which I extol its open qualities but fear that a focus on an open Internet can too often exclude worrying about an open PC -- which I define in a broader fashion than the divide between free and proprietary software typically contemplates. I think it's critically important that users retain general purpose PCs, even some with proprietary OSes, instead of "information appliances." I fear these appliances, like TiVo, can come to predominate -- or that the PC itself will morph towards becoming one, with new gatekeepers determining what code will or won't run on them, rather than the users themselves. As I shift from the paper towards a book on the subject, I'd like to know where the argument could use improvement or elaboration. Any comments appreciated, and I'd be happy to try to respond to them or to questions. ...JZ" I think he'd particularly like your input on the technical aspects of the paper. He is aware that not everyone will agree with every point he makes, but in true Open Source fashion, he's interested in hearing what you have to say, knowing that Groklaw readers have a high level of computer skills, for the most part, and that thoughtful comments can be expected.

The paper's downloadable as a PDF at SSRN, with free registration. Or, it's available at Harvard Law Review. (More papers by Professor Zittrain can be found here.) I've done a text version, with the author's permission, and with a lot of help from Carlo Graziani's bot, some new scripts from Mathfox, and some OCR and HTML help from jnc. I could not have done this without their help. The pagination is to match the PDF, as opposed to the Harvard Law Review's page numbering. Note that this article is copyrighted, and thus is not to be mirrored elsewhere. If you click on the footnote numbers, you can jump back and forth easily. Also, the various headings are linked.

******************************

THE GENERATIVE INTERNET

Jonathan L. Zittrain

TABLE OF CONTENTS

I. INTRODUCTION ......................2

II. A MAPPING OF GENERATIVE TECHNOLOGIES

A. Generative Technologies Defined ........7

1. Capacity for Leverage ........8

2. Adaptability..................8

3. Ease of Mastery................8

4. Accessibility ..................9

5. Generativity Revisited............9

B. The Generative PC ........9

C. The Generative Internet ........14

D. The Generative Grid ...........21

III. GENERATIVE DISCONTENT ..........23

A. Generative Equilibrium ........23

B. Generativity as Vulnerability: The Cybersecurity Fulcrum .............30

1. A Threat Unanswered and Unrealized...........30

2. The PC/Network Grid and a Now-Realized Threat......35

IV. A POSTDILUVIAN INTERNET ...........40

A. Elements of a Postdiluvian Internet ......40

1. Information Appliances ............41

2. The Appliancized PC...............44

B. Implications of a Postdiluvian Internet: More Regulability, Less Generativity ..........48

V. HOW TO TEMPER A POSTDILUVIAN INTERNET .........54

A. Refining Principles of Internet Design and Governance .........56

1. Superseding the End-to-End Argument........56

2. Reframing the Internet Governance Debate .........59

3. Recognizing Interests in Tension with Generativity.......61

4. “Dual Machine” Solutions .........63

B. Policy Interventions ........64

1. Enabling Greater Opportunity for Direct Liability .......64

2. Establishing a Choice Between Generativity and Responsibility .......65

VI. CONCLUSION .........66

THE GENERATIVE INTERNET

Jonathan L. Zittrain*

The generative capacity for unrelated and unaccredited audiences to build and distribute code and content through the Internet to its tens of millions of attached personal computers has ignited growth and innovation in information technology and has facilitated new creative endeavors. It has also given rise to regulatory and entrepreneurial backlashes. A further backlash among consumers is developing in response to security threats that exploit the openness of the Internet and of PCs to third-party contribution. A shift in consumer priorities from generativity to stability will compel undesirable responses from regulators and markets and, if unaddressed, could prove decisive in closing today’s open computing environments. This Article explains why PC openness is as important as network openness, as well as why today’s open network might give rise to unduly closed endpoints. It argues that the Internet is better conceptualized as a generative grid that includes both PCs and networks rather than as an open network indifferent to the configuration of its endpoints. Applying this framework, the Article explores ways — some of them bound to be unpopular among advocates of an open Internet represented by uncompromising end-to-end neutrality — in which the Internet can be made to satisfy genuine and pressing security concerns while retaining the most important generative aspects of today’s networked technology.

I. INTRODUCTION

From the moment of its inception in 1969,1 the Internet has been

designed to serve both as a means of establishing a logical network

and as a means of subsuming existing heterogeneous networks while allowing those networks to function independently — that is, both as a set of building blocks and as the glue holding the blocks together.2

2

The Internet was also built to be open to any sort of device: any computer or other information processor could be part of the new network so long as it was properly interfaced, an exercise requiring minimal technical effort.3 These principles and their implementing protocols have remained essentially unchanged since the Internet comprised exactly two network subscribers,4 even as the network has grown to reach hundreds of millions of people in the developed and developing worlds.5

Both mirroring and contributing to the Internet’s explosive growth

has been the growth of the personal computer (PC): an affordable, multifunctional device that is readily available at retail outlets and easily reconfigurable by its users for any number of purposes. The audience writing software for PCs, including software that is Internet capable, is itself massive and varied.6 This diverse audience has driven the variety of applications powering the rapid technological innovation to which we have become accustomed, in turn driving innovation in expressive endeavors such as politics, entertainment, journalism, and education.7

Though the openness of the Internet and the PC to third-party contribution is now so persistent and universal as to seem inevitable, these

3

technologies need not have been configured to allow instant contribution from a broad range of third parties. This Article sketches the generative features of the Internet and PCs, along with the alternative configurations that these instrumentalities defeated. This Article then describes the ways in which the technologies’ openness to third-party innovation, though possessing a powerful inertia that so far has resisted regulatory and commercial attempts at control, is increasingly at odds with itself.

Internet technologists have for too long ignored Internet and PC security threats that might precipitate an unprecedented intervention into the way today’s Internet and PCs work. These security threats are more pervasive than the particular vulnerabilities arising from bugs in a particular operating system (OS): so long as OSs permit consumers to run third-party code — the sine qua non of a PC OS — users can execute malicious code and thereby endanger their own work and that of others on the network. A drumbeat of trust-related concerns plausibly could fuel a gradual but fundamental shift in consumer demand toward increased stability in computing platforms. Such a shift would interact with existing regulatory and commercial pressures — each grounded in advancing economically rational and often legally protected interests — to create solutions that make it not merely possible, but likely, that the personal computing and networking environment of tomorrow will be sadly hobbled, bearing little resemblance to the one that most of the world enjoys today.

The most plausible path along which the Internet might develop is

one that finds greater stability by imposing greater constraint on, if not outright elimination of, the capacity of upstart innovators to demonstrate and deploy their genius to large audiences. Financial transactions over such an Internet will be more trustworthy, but the range of its users’ business models will be narrow. This Internet’s attached consumer hardware will be easier to understand and will be easier to use for purposes that the hardware manufacturers preconceive, but it will be off limits to amateur tinkering. Had such a state of affairs evolved by the Internet’s twenty-fifth anniversary in 1994, many of its formerly unusual and now central uses would never have developed because the software underlying those uses would have lacked a platform for exposure to, and acceptance by, a critical mass of users.

Those who treasure the Internet’s generative features must assess

the prospects of either satisfying or frustrating the growing forces against those features so that a radically different technology configuration need not come about. Precisely because the future is uncertain, those who care about openness and the innovation that today’s Internet and PC facilitate should not sacrifice the good to the perfect — or the future to the present — by seeking simply to maintain a tenuous technological status quo in the face of inexorable pressure to change. Rather, we should establish the principles that will blunt the most un-

4

appealing features of a more locked-down technological future while acknowledging that unprecedented and, to many who work with information technology, genuinely unthinkable boundaries could likely become the rules from which we must negotiate exceptions.

Although these matters are of central importance to cyberlaw, they

have generally remained out of focus in our field’s evolving literature, which has struggled to identify precisely what is so valuable about today’s Internet and which has focused on network openness to the exclusion of endpoint openness. Recognition of the importance and precariousness of the generative Internet has significant implications for, among other issues, the case that Internet service providers (ISPs) should unswervingly observe end-to-end neutrality, the proper focus of efforts under the rubric of “Internet governance,” the multifaceted debate over digital rights management (DRM), and prospects for Internet

censorship and filtering by authoritarian regimes.

Scholars such as Professors Yochai Benkler, Mark Lemley, and

Lawrence Lessig have crystallized concern about keeping the Internet “open,” translating a decades-old technical end-to-end argument concerning simplicity in network protocol design into a claim that ISPs should refrain from discriminating against particular sources or types of data.8 There is much merit to an open Internet, but this argument is really a proxy for something deeper: a generative networked grid. Those who make paramount “network neutrality” derived from end-to-end theory confuse means and ends, focusing on “network” without regard to a particular network policy’s influence on the design of network endpoints such as PCs. As a result of this focus, political advocates of end-to-end are too often myopic; many writers seem to presume current PC and OS architecture to be fixed in an “open” position. If they can be persuaded to see a larger picture, they may agree to some compromises at the network level. If complete fidelity to end-to-end network neutrality persists, our PCs may be replaced by information appliances or may undergo a transformation from open platforms to gated communities to prisons, creating a consumer information environment that betrays the very principles that animate end-to-end theory.

The much-touted differences between free and proprietary PC OSs

may not capture what is most important to the Internet’s future. Proprietary systems can remain “open,” as many do, by permitting unaffiliated third parties to write superseding programs and permitting PC

5

owners to install these programs without requiring any gatekeeping by the OS provider. In this sense, debates about the future of our PC experience should focus less on such common battles as Linux versus Microsoft Windows, as both are “open” under this definition, and more on generative versus nongenerative: understanding which platforms will remain open to third-party innovation and which will not.

The focus on the management of domain names among those participating in dialogues about Internet governance is equally unfortunate. Too much scholarly effort has been devoted to the question of institutional governance of this small and shrinking aspect of the Internet landscape.9 When juxtaposed with the generativity problem, domain names matter little. Cyberlaw’s challenge ought to be to find ways of regulating — though not necessarily through direct state action — which code can and cannot be readily disseminated and run upon the generative grid of Internet and PCs, lest consumer sentiment and preexisting regulatory pressures prematurely and tragically terminate the grand experiment that is the Internet today.

New software tools might enable collective regulation if they can

inform Internet users about the nature of new code by drawing on knowledge from the Internet itself, generated by the experiences of others who have run such code. Collective regulation might also entail new OS designs that chart a middle path between a locked-down PC on the one hand and an utterly open one on the other, such as an OS that permits users to shift their computers between “safe” and “experimental” modes, classifying new or otherwise suspect code as suited for only the experimental zone of the machine.

In other words, to Professor Lessig and those more categorically

libertarian than he, this Article seeks to explain why drawing a bright line against nearly any form of increased Internet regulability is no longer tenable. Those concerned about preserving flexibility in Internet behavior and coding should participate meaningfully in debates about changes to network and PC architecture, helping to ameliorate rather than ignore the problems caused by such flexibility that, if left unchecked, will result in unfortunate, hamhanded changes to the way mainstream consumers experience cyberspace.

An understanding of the value of generativity, the risks of its excesses, and the possible means to reconcile the two, stands to reinvigorate the debate over DRM systems. In particular, this understanding promises to show how, despite no discernable movement toward the

6

locked-down dystopias Professors Julie Cohen and Pamela Samuelson predicted in the late 1990s,10 changing conditions are swinging consumer sentiment in favor of architectures that promote effective DRM. This realignment calls for more than general opposition to laws that prohibit DRM circumvention or that mandate particular DRM schemes: it also calls for a vision of affirmative technology policy — one that includes some measure of added regulability of intellectual property — that has so far proven elusive.

Finally, some methods that could be plausibly employed in the

Western world to make PCs more tame for stability’s sake can greatly affect the prospects for Internet censorship and surveillance by authoritarian regimes in other parts of the world. If PC technology is inaptly refined or replaced to create new points of control in the distribution of new code, such technology is horizontally portable to these regimes — regimes that currently effect their censorship through leaky network blockages rather than PC lockdown or replacement.

II. A MAPPING OF GENERATIVE TECHNOLOGIES

To emphasize the features and virtues of an open Internet and PC,

and to help assess what features to give up (or blunt) to control some of the excesses wrought by placing such a powerfully modifiable technology into the mainstream, it is helpful to be clear about the meaning of generativity, the essential quality animating the trajectory of information technology innovation. Generativity denotes a technology’s overall capacity to produce unprompted change driven by large, varied, and uncoordinated audiences. The grid of PCs connected by the Internet has developed in such a way that it is consummately generative. From the beginning, the PC has been designed to run almost any program created by the manufacturer, the user, or a remote third party and to make the creation of such programs a relatively easy task. When these highly adaptable machines are connected to a network with little centralized control, the result is a grid that is nearly completely open to the creation and rapid distribution of the innovations of technology-savvy users to a mass audience that can enjoy those innovations without having to know how they work.

7

A. Generative Technologies Defined

Generativity is a function of a technology’s capacity for leverage

across a range of tasks, adaptability to a range of different tasks, ease of mastery, and accessibility.

1. Capacity for Leverage. — Our world teems with useful objects,

natural and artificial, tangible and intangible; leverage describes the extent to which these objects enable valuable accomplishments that otherwise would be either impossible or not worth the effort to achieve. Examples of devices or systems that have a high capacity for leverage include a lever itself (with respect to lifting physical objects), a band saw (cutting them), an airplane (getting from one place to another), a piece of paper (hosting written language), or an alphabet (constructing words). A generative technology makes difficult jobs easier. The more effort a device or technology saves — compare a sharp knife to a dull one — the more generative it is. The greater the variety of accomplishments it enables — compare a sharp Swiss Army knife to a sharp regular knife — the more generative it is.11

2. Adaptability. — Adaptability refers to both the breadth of a

technology’s use without change and the readiness with which it might be modified to broaden its range of uses. A given instrumentality may be highly leveraging, yet suited only to a limited range of applications. For example, although a plowshare can enable the planting of a variety of seeds, planting is its essential purpose. Its comparative leverage quickly vanishes when devoted to other tasks, such as holding doors open, and it is not readily modifiable for new purposes. The same goes for swords (presuming they are indeed difficult to beat into plowshares), guns, chairs, band saws, and even airplanes. In contrast, paper obtained for writing can instead (or additionally) be used to wrap fish. A technology that offers hundreds of different additional kinds of uses is more adaptable and, all else equal, more generative than a technology that offers fewer kinds of uses. Adaptability in a tool better permits leverage for previously unforeseen purposes.

3. Ease of Mastery. — A technology’s ease of mastery reflects how

easy it is for broad audiences both to adopt and to adapt it: how much skill is necessary to make use of its leverage for tasks they care about, regardless of whether the technology was designed with those tasks in mind. An airplane is neither easy to fly nor easy to modify for new purposes, not only because it is not inherently adaptable to purposes other than transportation, but also because of the skill required to make whatever transformative modifications might be possible and

8

because of the risk of physical injury if one poorly executes such modifications. Paper, in contrast, is readily mastered: children can learn how to use it the moment they enter preschool, whether to draw on or to fold into a paper airplane (itself much easier to fly and modify than a real one). Ease of mastery also refers to the ease with which people might deploy and adapt a given technology without necessarily mastering all possible uses. Handling a pencil takes a mere moment to understand and put to many uses even though it might require innate artistic talent and a lifetime of practice to achieve da Vincian levels of mastery with it. That is, much of the pencil’s generativity stems from how useful it is both to the neophyte and to the master.

4. Accessibility. — The more readily people can come to use and

control a technology, along with what information might be required to master it, the more accessible the technology is. Barriers to accessibility can include the sheer expense of producing (and therefore consuming) the technology, taxes and regulations imposed on its adoption or use (for example, to serve a government interest directly or to help private parties monopolize the technology through intellectual property law), and the use of secrecy and obfuscation by its producers to maintain scarcity without necessarily relying upon an affirmative intellectual property interest.12

By this reckoning of accessibility, paper and plowshares are highly

accessible, planes hardly at all, and cars somewhere in the middle. It might be easy to learn how to drive a car, but cars are expensive, and the privilege of driving, once earned by demonstrating driving skill, is revocable by the government. Moreover, given the nature of cars and driving, such revocation is not prohibitively expensive to enforce effectively.

5. Generativity Revisited. — As defined by these four criteria,

generativity increases with the ability of users to generate new, valuable uses that are easy to distribute and are in turn sources of further innovation. It is difficult to identify in 2006 a technology bundle more generative than the PC and the Internet to which it attaches.

B. The Generative PC

The modern PC’s generativity flows from a separation of hardware

from software.13 From the earliest days of computing, this separation has been sensible, but it is not necessary. Both hardware and software comprise sets of instructions that operate upon informational inputs to

create informational outputs; the essential difference is that hardware

9

is not easy to change once it leaves the factory. If the manufacturer knows enough about the computational task a machine will be asked to perform, the instructions to perform the task could be “bolted in” as hardware. Indeed, “bolting” is exactly what is done with an analog adding machine, digital calculator, “smart” typewriter, or the firmware within a coffeemaker that enables it to begin brewing at a user-selected time. These devices are all hardware and no software. Or, as some might say, their software is inside their hardware.

The essence — and genius — of software standing alone is that it

allows unmodified hardware to execute a new algorithm, obviating the difficult and expensive process of embedding that algorithm in hardware.14 PCs carry on research computing’s tradition of separating hardware and software by allowing the user to input new code. Such code can be loaded and run even once the machine is solely in the consumer’s hands. Thus, the manufacturer can write new software after the computer leaves the factory, and a consumer needs to know merely how to load the cassette, diskette, or cartridge containing the software to enjoy its benefits. (In today’s networked environment, the consumer need not take any action at all for such reprogramming to take place.) Further, software is comparatively easy for the manufacturer to develop because PCs carry on another sensible tradition of their institutional forbears: they make use of OSs.15 OSs provide a higher level of abstraction at which the programmer can implement his or her algorithms, allowing a programmer to take shortcuts when creating software.16 The ability to reprogram using external inputs provides adaptability; OSs provide leverage and ease of mastery.

Most significant, PCs were and are accessible. They were designed

to run software not written by the PC manufacturer or OS publisher, including software written by those with whom these manufacturers had no special arrangements.17 Early PC manufacturers not only published the documentation necessary to write software that would run on their OSs, but also bundled high-level programming languages

along with their computers so that users themselves could learn to

10

write software for use on their (and potentially others’) computers.18 High-level programming languages are like automatic rather than manual transmissions for cars: they do not make PCs more leveraged, but they do make PCs accessible to a wider audience of programmers.19 This increased accessibility enables programmers to produce software that might not otherwise be written.

That the resulting PC was one that its own users could — and did

— program is significant. The PC’s truly transformative potential is fully understood, however, as a product of its overall generativity.

PCs were genuinely adaptable to any number of undertakings by

people with very different backgrounds and goals. The early OSs lacked capabilities we take for granted today, such as multitasking, but they made it possible for programmers to write useful software with modest amounts of effort and understanding.20

Users who wrote their own software and thought it suited for general use could hang out the shingle in the software business or simply share the software with others. A market in third-party software developed and saw a range of players, from commercial software publishers employing thousands of people, to collaborative software projects that made their outputs freely available, to hobbyists who showcased and sold their wares by advertising in computer user magazines or through local computer user groups.21 Such a range of developers enhanced the variety of applications that were written not only because accessibility arguably increased the sheer number of people

coding, but also because people coded for different reasons. While

11

hobbyists might code for fun, others might code out of necessity, desiring an application but not having enough money to hire a commercial software development firm. And, of course, commercial firms could provide customized programming services to individual users or could develop packaged software aimed at broad markets.

This variety is important because the usual mechanisms that firms

use to gauge and serve market demand for particular applications may not provide enough insight to generate every valuable application. It might seem obvious, for example, that a spreadsheet would be of great use to someone stuck with an adding machine, but the differences between the new application and the old method may prevent firms from recognizing the former as better serving other tasks. The PC, however, allowed multiple paths for the creation of new applications: Firms could attempt to gauge market need and then write and market an application to meet that need. Alternatively, people with needs could commission firms to write software. Finally, some could simply write the software themselves.

This configuration — a varied audience of software writers, most

unaffiliated with the manufacturers of the PCs for which they wrote software — turned out to benefit the manufacturers, too, because greater availability of software enhanced the value of PCs and their OSs. Although many users were tinkerers, many more users sought PCs to accomplish particular purposes nearly out of the box; PC manufacturers could tout to the latter the instant uses of their machines provided by the growing body of available software.

Adapting a metaphor from Internet architecture, PC architecture

can be understood as shaped like an hourglass.22 PC OSs sit in the narrow middle of the hourglass; most evolved slowly because proprietary OSs were closed to significant outside innovation. At the broad bottom of the PC hourglass lies cheap, commoditized hardware. At the broad top of the hourglass sits the application layer, in which most PC innovation takes place. Indeed, the computer and its OS are products, not services, and although the product life might be extended through upgrades, such as the addition of new physical memory chips or OS upgrades, the true value of the PC lies in its availability and stability as a platform for further innovation — running applications from a variety of sources, including the users themselves, and thus lev-

12

eraging the built-in power of the OS for a breathtaking range of tasks.23

Despite the scholarly and commercial attention paid to the debate

between free and proprietary OSs,24 the generativity of the PC depends little on whether its OS is free and open or proprietary and closed — that is, whether the OS may be modified by end users and third-party software developers. A closed OS like Windows can be and has been a highly generative technology. Windows is generative, for instance, because its application programming interfaces enable a programmer to rework nearly any part of the PC’s functionality and give external developers ready access to the PC’s hardware inputs and outputs, including scanners, cameras, printers, monitors, and mass storage. Therefore, nearly any desired change to the way Windows works can be imitated by code placed at the application level.25 The qualities of an OS that are most important have to do with making computing power, and the myriad tasks it can accomplish, available for varied audiences to adapt, share, and use through the ready creation and exchange of application software. If this metric is used, debates over whether the OS source code itself should be modifiable are secondary.

Closed but generative OSs — largely Microsoft DOS and then Microsoft Windows — dominate the latter half of the story of the PC’s rise, and their early applications outperformed their appliance-based counterparts. For example, despite the head start from IBM’s production of a paper tape–based word processing unit in World War II,

13

dedicated word processing appliances26 have been trounced by PCs running word processing software. PC spreadsheet software has never known a closed, appliance-based consumer product as a competitor;27 PCs have proven viable substitutes for video game consoles as platforms for gaming;28 and even cash registers have been given a run for their money by PCs configured to record sales and customer information, accept credit card numbers, and exchange cash at retail points of sale.29

The technology and market structures that account for the highly

generative PC have endured despite the roller coaster of hardware and software producer fragmentation and consolidation that make PC industry history otherwise heterogeneous.30 These structures and their implications can, and plausibly will, change in the near future. To understand these potential changes, their implications, and why they should be viewed with concern and addressed by action designed to minimize their untoward effects, it is important first to understand roughly analogous changes to the structure of the Internet that are presently afoot. As the remainder of this Part explains, the line between the PC and the Internet has become vanishingly thin, and it is no longer helpful to consider them separately when thinking about the future of consumer information technology and its relation to innovation and creativity.

C. The Generative Internet

The Internet today is exceptionally generative. It can be leveraged:

its protocols solve difficult problems of data distribution, making it much cheaper to implement network-aware services.31 It is adaptable

14

in the sense that its basic framework for the interconnection of nodes is amenable to a large number of applications, from e-mail and instant messaging to telephony and streaming video.32 This adaptability exists in large part because Internet protocol relies on few assumptions about the purposes for which it will be used and because it efficiently scales to accommodate large amounts of data and large numbers of users.33 It is easy to master because it is structured to allow users to design new applications without having to know or worry about the intricacies of packet routing.34 And it is accessible because, at the functional level, there is no central gatekeeper with which to negotiate access and because its protocols are publicly available and not subject to intellectual property restrictions.35 Thus, programmers independent of the Internet’s architects and service providers can offer, and consumers can accept, new software or services.

How did this state of affairs come to pass? Unlike, say, FedEx,

whose wildly successful offline package delivery network depended initially on the financial support of venture capitalists to create an efficient physical infrastructure, those individuals thinking about the Internet in the 1960s and 1970s planned a network that would cobble together existing networks and then wring as much use as possible from them.36

The network’s design is perhaps best crystallized in a seminal 1984

paper entitled End-to-End Arguments in System Design.37 As this paper describes, the Internet’s framers intended an hourglass design, with a simple set of narrow and slow-changing protocols in the middle, resting on an open stable of physical carriers at the bottom and any number of applications written by third parties on the top. The Internet hourglass, despite having been conceived by an utterly different group of architects from those who designed or structured the market

15

for PCs, thus mirrors PC architecture in key respects. The network is indifferent to both the physical media on which it operates and the nature of the data it passes, just as a PC OS is open to running upon a variety of physical hardware “below” and to supporting any number of applications from various sources “above.”

The authors of End-to-End Arguments describe, as an engineering

matter, why it is better to keep the basic network operating protocols simple — and thus to implement desired features at, or at least near, the endpoints of the networks.38 Such features as error correction in data transmission are best executed by client-side applications that check whether data has reached its destination intact rather than by the routers in the middle of the chain that pass data along.39 Ideas that entail changing the way the routers on the Internet work — ideas that try to make them “smarter” and more discerning about the data they choose to pass — challenge end-to-end philosophy.

The design of the Internet also reflects both the resource limitations

and intellectual interests of its creators, who were primarily academic researchers and moonlighting corporate engineers. These individuals did not command the vast resources needed to implement a global network and had little interest in exercising control over the network or its users’ behavior.40 Energy spent running the network was seen as a burden; the engineers preferred to design elegant and efficient protocols whose success was measured precisely by their ability to run without effort. Keeping options open for growth and future development was seen as sensible,41 and abuse of the network was of little worry because the people using it were the very people designing it — a culturally homogenous set of people bound by their desire to see the network work.

Internet designers recognized the absence of persistent connections

between any two nodes of the network,42 and they wanted to allow additions to the network that neither taxed a central hub nor required centrally managed adjustments to overall network topography.43 These constraints inspired the development of a stateless protocol — one that did not presume continuous connections or centralized knowledge of those connections — and packet switching,44 which broke all

16

data into small, discrete packets and permitted multiple users to share a connection, with each sending a snippet of data and then allowing someone else’s data to pass. The constraints also inspired the fundamental protocols of packet routing, by which any number of routers in a network — each owned and managed by a different entity — maintain tables indicating in which rough direction data should travel without knowing the exact path to the data’s final destination.45

A flexible, robust platform for innovation from all corners sprang

from these technical and social underpinnings. Two further historical developments assured that an easy-to-master Internet would also be extraordinarily accessible. First, the early Internet consisted of nodes primarily at university computer science departments, U.S. government research units, and select technology companies with an interest in cutting-edge network research.46 These institutions collaborated on advances in bandwidth management and tools for researchers to use for communication and discussion.47 But consumer applications were nowhere to be found until the Internet began accepting commercial interconnections without requiring academic or government research justifications, and the population at large was solicited to join.48 This historical development — the withering away of the norms against commercial use and broad interconnection that had been reflected in a National Science Foundation admonishment that its contribution to the functioning Internet backbone be used for noncommercial purposes49 — greatly increased the Internet’s generativity. It opened development of networked technologies to a broad, commercially driven audience that individual companies running proprietary services did not think to invite and that the original designers of the Internet would not have thought to include in the design process.

A second historical development is easily overlooked because it

may in retrospect seem inevitable: the dominance of the Internet as the network to which PCs connected, rather than the emergence of proprietary networks analogous to the information appliances that PCs themselves beat. The first large-scale networking of consumer PCs

17

took place through self-contained “walled garden” networks like CompuServe, The Source, and Prodigy.50 Each network connected its members only to other subscribing members and to content managed and cleared through the network proprietor. For example, as early as 1983, a home computer user with a CompuServe subscription was able to engage in a variety of activities — reading news feeds, sending e-mail, posting messages on public bulletin boards, and participating in rudimentary multiplayer games (again, only with other CompuServe subscribers).51 But each of these activities was coded by CompuServe or its formal partners, making the network much less generatively accessible than the Internet would be. Although CompuServe entered into some development agreements with outside software programmers and content providers,52 even as the service grew to almost two million subscribers by 1994, its core functionalities remained largely unchanged.53

The proprietary services could be leveraged for certain tasks, and

their technologies were adaptable to many purposes and easy to master, but consumers’ and outsiders’ inability to tinker easily with the services limited their generativity.54 They were more like early video game consoles55 than PCs: capable of multiple uses, through the development of individual “cartridges” approved by central management, yet slow to evolve because potential audiences of developers were slowed or shut out by centralized control over the network’s services.

The computers first attached to the Internet were mainframes and

minicomputers of the sort typically found within university computer science departments,56 and early desktop access to the Internet came through specialized nonconsumer workstations, many running variants

18

of the UNIX OS.57 As the Internet expanded and came to appeal to nonexpert participants, the millions of PCs in consumer hands were a natural source of Internet growth.58 Despite the potential market, however, no major OS producer or software development firm quickly moved to design Internet protocol compatibility into PC OSs. PCs could access “walled garden” proprietary services, but their ability to run Internet-aware applications locally was limited.

A single hobbyist took advantage of PC generativity and produced

and distributed the missing technological link. Peter Tattam, an employee in the psychology department of the University of Tasmania, wrote Trumpet Winsock, a program that allowed owners of PCs running Microsoft Windows to forge a point-to-point Internet connection with the servers run by the nascent ISP industry.59 Ready consumer access to Internet-enabled applications such as Winsock, coupled with the development of graphical World Wide Web protocols and the PC browsers to support them — all initially noncommercial ventures — marked the beginning of the end of proprietary information services and peer-to-peer telephone-networked environments like electronic bulletin boards. After recognizing the popularity of Tattam’s software, Microsoft bundled the functionality of Winsock with later versions of Windows 95.60

As PC users found themselves increasingly able to access the Internet, proprietary network operators cum content providers scrambled to reorient their business models away from corralled content and toward accessibility to the wider Internet.61 These online service providers quickly became mere ISPs, with their users branching out to the thriving Internet for programs and services.62 Services like CompuServe’s “Electronic Mall” — an e-commerce service allowing outside vendors, through arrangements with CompuServe, to sell products to subscrib-

19

ers63 — were lost amidst an avalanche of individual websites selling goods to anyone with Internet access.

The greater generativity of the Internet compared to that of proprietary networked content providers created a network externality: as more information consumers found their way to the Internet, there was more reason for would-be sources of information to set up shop there, in turn attracting more consumers. Dispersed third parties could and did write clients and servers for instant messaging,64 web browsing,65 e-mail exchange,66 and Internet searching.67 Furthermore, the Internet remained broadly accessible: anyone could cheaply sign on, immediately becoming a node on the Internet equal to all others in information exchange capacity, limited only by bandwidth. Today, due largely to the happenstance of default settings on consumer wireless routers, Internet access might be free simply because one is sitting on a park bench near the apartment of a broadband subscriber who has “gone wireless.”68

The resulting Internet is a network that no one in particular owns

and that anyone can join. Of course, joining requires the acquiescence of at least one current Internet participant, but if one is turned away at one place, there are innumerable others to court, and commercial ISPs provide service at commoditized rates.69 Those who want to offer services on the Internet or connect a formerly self-contained local network — such as a school that wants to link its networked computers

20

to the Internet — can find fast and reliable broadband Internet access for several hundred dollars per month.70 Quality of service (QoS) — consistent bandwidth between two points71 — is difficult to achieve because the Internet, unlike a physical distribution network run by one party from end to end such as FedEx, comprises so many disparate individual networks. Nevertheless, as the backbone has grown and as technical innovations have both reduced bandwidth requirements and staged content “near” those who request it,72 the network has proven remarkably effective even in areas — like person-to-person video and audio transmission — in which it at first fell short.

D. The Generative Grid

Both noncommercial and commercial enterprises have taken advantage of open PC and Internet technology, developing a variety of Internet-enabled applications and services, many going from implementation to popularity (or notoriety) in a matter of months or even days. Yahoo!, Amazon.com, eBay, flickr, the Drudge Report, CNN. com, Wikipedia, MySpace: the list of available services and activities could go into the millions, even as a small handful of Web sites and applications account for a large proportion of online user activity.73 Some sites, like CNN.com, are online instantiations of existing institutions; others, from PayPal to Geocities, represent new ventures by formerly unestablished market participants. Although many of the offerings created during the dot-com boom years — roughly 1995 to 2000

21

— proved premature at best and flatly ill-advised at worst, the fact remains that many large companies, including technology-oriented ones, ignored the Internet’s potential for too long.74

Significantly, the last several years have witnessed a proliferation of

PCs hosting broadband Internet connections.75 The generative PC has become intertwined with the generative Internet, and the whole is now greater than the sum of its parts. A critical mass of always-on computers means that processing power for many tasks, ranging from difficult mathematical computations to rapid transmission of otherwise prohibitively large files, can be distributed among hundreds, thousands, or millions of PCs.76 Similarly, it means that much of the information that once needed to reside on a user’s PC to remain conveniently accessible — documents, e-mail, photos, and the like — can instead be stored somewhere on the Internet.77 So, too, can the programs that a user might care to run.

This still-emerging “generative grid” expands the boundaries of leverage, adaptability, and accessibility for information technology. It also raises the ante for the project of cyberlaw because the slope of this innovative curve may nonetheless soon be constrained by some of the very factors that have made it so steep. Such constraints may arise because generativity is vulnerability in the current order: the fact that tens of millions of machines are connected to networks that can convey reprogramming in a matter of seconds means that those computers stand exposed to near-instantaneous change. This kind of generativity keeps publishers vulnerable to the latest tools of intellectual property infringement, crafted ever more cleverly to evade monitoring and control, and available for installation within moments everywhere. It also opens PCs to the prospect of mass infection by a computer virus that exploits either user ignorance or a security vulnerability that allows code from the network to run on a PC without approval by its owner. Shoring up these vulnerabilities will require substantial changes in some aspects of the grid, and such changes are sure to affect the current level of generativity. Faced with the prospect of such changes, we

22

must not fight an overly narrow if well-intentioned battle simply to preserve end-to-end network neutrality or to focus on relative trivialities like domain names and cookies. Recognizing the true value of the grid — its hypergenerativity — along with the magnitude of its vulnerabilities and the implications of eliminating those vulnerabilities, leads to the realization that we should instead carefully tailor reforms to address those vulnerabilities with minimal impact on generativity.

III. GENERATIVE DISCONTENT

To appreciate the power of the new and growing Internet backlash

— a backlash that augurs a dramatically different, managed Internet of the sort that content providers and others have unsuccessfully strived to bring about — one must first identify three powerful groups that may find common cause in seeking a less generative grid: regulators (in part driven by threatened economic interests, including those of content providers), mature technology industry players, and consumers. These groups are not natural allies in technology policy, and only recently have significant constituencies from all three sectors gained momentum in promoting a refashioning of Internet and PC architecture that would severely restrict the Internet’s generativity.

A. Generative Equilibrium

Cyberlaw scholarship has developed in three primary strands.

First, early work examined legal conflicts, usually described in reported judicial opinions, arising from the use of computers and digital networks, including the Internet.78 Scholars worked to apply statutory or common law doctrine to such conflicts, at times stepping back to dwell on whether new circumstances called for entirely new legal approaches.79 Usually the answer was that they did not: these scholars

23

mostly believed that creative application of existing doctrine, directly or by analogy, could resolve most questions. Professors such as David Johnson and David Post disagreed with this view, maintaining in a second strand of scholarship that cyberspace is different and therefore best regulated by its own sets of rules rather than the laws of territorial governments.80

In the late 1990s, Professor Lessig and others argued that the debate was too narrow, pioneering yet a third strand of scholarship. Professor Lessig argued that a fundamental insight justifying cyberlaw as a distinct field is the way in which technology — as much as the law itself — can subtly but profoundly affect people’s behavior: “Code is law.”81 He maintained that the real projects of cyberlaw are both to correct the common misperception that the Internet is permanently unregulable and to reframe doctrinal debates as broader policy-oriented ones, asking what level of regulability would be appropriate to build into digital architectures. For his part, Professor Lessig argued that policymakers should typically refrain from using the powers of regulation through code — powers that they have often failed, in the first instance, to realize they possess.82

The notion that code is law undergirds a powerful theory, but some

of its most troubling empirical predictions about the use of code to restrict individual freedom have not yet come to pass. Work by professors such as Julie Cohen and Pamela Samuelson has echoed Professor Lessig’s fears about the ways technology can unduly constrain individ-

24

ual behavior — fears that include a prominent subset of worries about socially undesirable digital rights management and “trusted systems.”83

Trusted systems are systems that can be trusted by outsiders

against the people who use them.84 In the consumer information technology context, such systems are typically described as “copyright management” or “rights management” systems, although such terminology is loaded. As critics have been quick to point out, the protections afforded by these systems need not bear any particular relationship to the rights granted under, say, U.S. copyright law.85 Rather, the possible technological restrictions on what a user may do are determined by the architects themselves and thus may (and often do) prohibit many otherwise legal uses. An electronic book accessed through a rights management system might, for example, have a limitation on the number of times it can be printed out, and should the user figure out how to print it without regard to the limitation, no fair use defense would be available. Similarly, libraries that subscribe to electronic material delivered through copyright management systems may find themselves technologically incapable of lending out that material the way a traditional library lends out a book, even though the act of lending is a privilege — a defense to copyright infringement for unlawful “distribution” — under the first sale doctrine.86

The debate over technologically enforced “private” copyright

schemes in the United States grew after the Digital Millennium Copy-

25

right Act87 (DMCA) was signed into law with a raft of complex anti-circumvention provisions.88 These provisions are intended to support private rights management schemes with government sanctions for their violation. They provide both criminal and civil penalties for those who use, market, or traffic in technologies designed to circumvent technological barriers to copyright infringement. They also penalize those who gain mere “access” to such materials, a right not reserved to the copyright holder.89 For example, if a book has a coin slot on the side and requires a would-be reader to insert a coin to read it, one who figures out how to open and read it without inserting a coin might be violating the anticircumvention provisions — even though one would not be directly infringing the exclusive rights of copyright.

These are the central fears of the “code is law” theory as applied to

the Internet and PCs: technical barriers prevent people from making use of intellectual works, and nearly any destruction of those barriers, even to enable lawful use, is unlawful. But these fears have largely remained hypothetical. To be sure, since the DMCA’s passage a number of unobtrusive rights management schemes have entered wide circulation.90 Yet only a handful of cases have been brought against those who have cracked such schemes,91 and there has been little if any decrease in consumers’ capacity to copy digital works, whether for fair use or for wholesale piracy. Programmers have historically been able to crack nearly every PC protection scheme, and those less technically inclined can go to the trouble of generating a fresh copy of a digital work without having to crack the scheme, exploiting the “analog

26

hole” by, for example, filming a television broadcast or recording a playback of a song.92 Once an unprotected copy is generated, it can then be shared freely on Internet file-sharing networks that run on generative PCs at the behest of their content-hungry users.93

Thus, the mid-1990s’ fears of digital lockdown through trusted systems may seem premature or unfounded. As a practical matter, any scheme designed to protect content finds itself rapidly hacked, and the hack (or the content protected) in turn finds itself shared with technically unsophisticated PC owners. Alternatively, the analog hole can be used to create a new, unprotected master copy of protected content. The fact remains that so long as code can be freely written by anyone and easily distributed to run on PC platforms, trusted systems can serve as no more than speed bumps or velvet ropes — barriers that the public circumvents should they become more than mere inconveniences. Apple’s iTunes Music Store is a good example of this phenomenon: music tracks purchased through iTunes are encrypted with Apple’s proprietary scheme,94 and there are some limitations on their use that, although unnoticeable to most consumers, are designed to prevent the tracks from immediately circulating on peer-to-peer networks.95 But the scheme is easily circumvented by taking music purchased from the store, burning it onto a standard audio CD, and then re-ripping the CD into an unprotected format, such as MP3.96

An important claim endures from the third “code is law” strand of

cyberlaw scholarship. Professors Samuelson, Lessig, and Cohen were right to raise alarm about such possibilities as losing the ability to read anonymously, to lend a copyrighted work to a friend, and to make fair use of materials that are encrypted for mere viewing. However, the second strand of scholarship, which includes the empirical claims

27

about Internet separatism advanced by Professor Johnson and David Post, appears to explain why the third strand’s concerns are premature. Despite worries about regulation and closure, the Internet and the PC have largely remained free of regulation for mainstream users who experience regulation in the offline world but wish to avoid it in the online world, whether to gamble, obtain illegal pornography, or reproduce copyrighted material.

The first strand, which advocates incremental doctrinal adaptation

to new technologies, explains how Internet regulators have indeed acted with a light touch even when they might have had more heavy-handed options. As I discuss elsewhere, the history of Internet regulation in the Western world has been tentative and restrained, with a focus by agile Internet regulators on gatekeeping regimes, effected through discrete third parties within a sovereign’s jurisdiction or through revisions to technical architectures.97 Although Internet regulators are powerful in that they can shape, apply, and enforce the authority of major governments, many have nevertheless proven willing to abstain from major intervention.

This lack of intervention has persisted even as the mainstream

adoption of the Internet has increased the scale of interests that Internet uses threaten. Indeed, until 2001, the din of awe and celebration surrounding the Internet’s success, including the run-up in stock market valuations led by dot-coms, drowned out many objections to and discussion about Internet use and reform — who would want to disturb a goose laying golden eggs? Further, many viewed the Internet as immutable and thought the problems it generated should be dealt with piecemeal, without considering changes to the way the network functioned — a form of “is-ism” that Professor Lessig’s third strand of cyberlaw scholarship famously challenged in the late 1990s even as he advocated that the Internet’s technology remain essentially as is, unfettered by regulation.98

By 2002 the dot-com hangover was in full sway, serving as a backdrop to a more jaundiced mainstream view of the value of the Internet. The uptake of consumer broadband, coupled with innovative but disruptive applications like the file-sharing service Napster — launched by amateurs but funded to the tune of millions of dollars during the boom — inspired fear and skepticism rather than envy and

28

mimicry among content providers. And a number of lawsuits against such services, initiated during the crest of the Internet wave, had by this time resulted in judgments against their creators.99 The woes of content providers have made for a natural starting point in understanding the slowly building backlash to the generative Internet, and they appropriately continue to inform a large swath of cyberlaw scholarship. But the persistence of the publishers’ problems and the dot-com bust have not alone persuaded regulators or courts to tinker fundamentally with the Internet’s generative capacity. The duties of Western-world ISPs to police or preempt undesirable activities are still comparatively light, even after such perceived milestones as the Digital Millennium Copyright Act and the Supreme Court’s decision against file-sharing promoters in Metro-Goldwyn-Mayer Studios Inc. v. Grokster, Ltd.100 Although there is more regulation in such countries as China and Saudi Arabia, where ISPs are co-opted to censor content and services that governments find objectionable,101 regulatory efforts are still framed as exceptions to the rule that where the Internet goes, freedom of action by its subscribers follows.

By incorporating the trajectory of disruption by the generative

Internet followed by comparatively mild reaction by regulators, the three formerly competing strands of cyberlaw scholarship can be reconciled to explain the path of the Internet: Locked-down PCs are possible but undesirable. Regulators have been unwilling to take intrusive measures to lock down the generative PC and have not tried to trim the Internet’s corresponding generative features to any significant degree. The Internet’s growth has made the underlying problems of concern to regulators more acute. Despite their unwillingness to act to bring about change, regulators would welcome and even encourage a PC/Internet grid that is less exceptional and more regulable.

29

B. Generativity as Vulnerability: The Cybersecurity Fulcrum

Mainstream firms and individuals now use the Internet. Some use

it primarily to add to the generative grid, whether to create new technology or new forms of art and other expression facilitated by that technology. Others consume intellectual work over the Internet, whether developed generatively or simply ported from traditional distribution channels for news and entertainment such as CDs, DVDs, and broadcast radio and television. Still others, who use it primarily for nonexpressive tasks like shopping or selling, embrace simplicity and stability in the workings of the technology.

Consumers hold the key to the balance of power for tomorrow’s

Internet. They are powerful because they drive markets and because many vote. If they remain satisfied with the Internet’s generative characteristics — continuing to buy the hardware and software that make it possible and to subscribe to ISPs that offer unfiltered access to the Internet at large — then the regulatory and industry forces that might otherwise wish to constrain the Internet will remain in check, maintaining a generative equilibrium. If, in contrast, consumers change their view — not simply tolerating a locked-down Internet but embracing it outright — then the balance among competing interests that has favored generative innovation will tilt away from it. Such a shift is and will continue to be propelled by an underappreciated phenomenon: the security vulnerabilities inherent in a generative Internet populated by mainstream consumers possessing powerful PCs.

The openness, decentralization, and parity of Internet nodes described in Part II are conventionally understood to contribute greatly to network security because a network without a center will have no central point of breakage. But these characteristics also create vulnerabilities, especially when united with both the flaws in the machines connecting to the network and the users’ lack of technical sophistication. These vulnerabilities have been individually noted but collectively ignored. A decentralized Internet does not lend itself well to collective action, and its vulnerabilities have too readily been viewed as important but not urgent. Dealing with such vulnerabilities collectively can no longer be forestalled, even though a locus of responsibility for action is difficult to find within such a diffuse configuration.

1. A Threat Unanswered and Unrealized. — On the evening of

November 2, 1988, a Cornell University graduate student named Robert Tappan Morris transmitted a small piece of software over the Internet from Cornell to MIT.102 At the time, few PCs were attached to the Internet; rather, it was the province of mainframes, minicom-

30

puters, and professional workstations in institutional hands.103 Morris’s software, when run on the MIT machine, sought out other nearby computers on the Internet, and it then behaved as if it were a person wanting to log onto those machines.104

The sole purpose of the software — called variously a “virus” or,

because it could transmit itself independently, a “worm” — was to propagate, attempting to crack each newly discovered computer by guessing user passwords or exploiting a known security flaw.105 It succeeded. By the following morning, a large number of machines on the Internet had ground to a near halt. Each had been running the same flavor of vulnerable Unix software that the University of California at Berkeley had made freely available.106 Estimates of the number of computers affected ranged from 1000 to 6000.107

Within a day or two, puzzled network administrators reverse engineered the worm.108 Its methods of propagation were discovered, and the Berkeley computer science department coordinated patches for vulnerable Unix systems.109 These patches were made available over the Internet, at least to those users who had not elected to disconnect their machines to prevent reinfection.110 The worm was traced to Morris, who apologized; a later criminal prosecution for the act resulted in his receiving three years’ probation, 400 hours of community service, and a $10,050 fine.111

The Morris worm was a watershed event because it was the first

virus spread within an always-on network linking always-on computers. Despite the dramatically smaller and demographically dissimi-

31

lar information technology landscape that existed when the Morris worm was released, nearly everything one needs to know about the risks to Internet and PC security today resides within this story. Responses to security breaches today have not significantly improved since the response in 1988 to the Morris worm, even though today’s computing and networking are categorically larger in scale and less tightly controlled.

The computers of the 1988 Internet could be compromised because

they were general-purpose machines, running OSs with which outsiders could become familiar and for which these outsiders could write executable code. They were powerful enough to run multiple programs and host multiple users simultaneously.112 They were generative. When first compromised, they were able to meet the demands of both the worm and their regular users, thereby buying time for the worm’s propagation before human intervention was made necessary.113 The OS and other software running on the machines were not perfect: they contained flaws that rendered them more generatively accessible than their designers intended.114 More important, even without such flaws, the machines were designed to be operated at a distance and to receive and run software sent from a distance. They were powered and attached to a network continuously, even when not in use by their owners. And the users of these machines were lackadaisical about implementing available fixes to known software vulnerabilities — and often utterly, mechanistically predictable in the passwords they chose to protect entry into their computer accounts.115

Each of these facts contributing to the vulnerabilities of networked

life remains true today, and the striking feature is how few recurrences of truly disruptive security incidents there have been since 1988. Remarkably, a network designed for communication among academic and government researchers scaled beautifully as hundreds of millions of new users signed on during the 1990s, a feat all the more impressive when one considers how technologically unsavvy most new users were in comparison to the 1988 crowd. However heedless network administrators in 1988 were of good security hygiene, mainstream consumers of the 1990s were far worse. Few knew how to manage or code their PCs, much less how to apply patches rigorously or observe good password security.

32

Network engineers and government officials did not take any significant preventive actions to forestall another Morris-type worm, despite a brief period of soul searching. Although many who reflected on the Morris worm grasped the dimensions of the problem it heralded, the problem defied easy solution because management of both the Internet and the computers attached to it was so decentralized and because any truly effective solution would cauterize the very purpose of the Internet.116

The worm did not literally “infect” the Internet itself because it did

not reprogram the Internet’s various distributed routing mechanisms and corresponding links. Instead, the burden on the network was simply increased traffic, as the worm impelled computers to communicate with each other while it attempted to spread ad infinitum.117 The computers actually infected were managed by disparate groups and individuals who answered to no particular authority for their use or for failing to secure them against compromise.118 These features led those individuals overseeing network protocols and operation to conclude that the worm was not their problem.

Engineers concerned with the Internet protocols instead responded

to the Morris worm incident by suggesting that the appropriate solution was more professionalism among coders. The Internet Engineering Task Force — the far-flung, unincorporated group of engineers who work on Internet standards and who have defined its protocols through a series of formal “request for comments” documents, or RFCs — published informational RFC 1135 as a postmortem on the worm incident.119 After describing the technical details of the worm, the RFC focused on “computer ethics” and the need to instill and enforce ethical standards as new people — mostly young computer scientists such as Morris — signed on to the Internet.120

The state of play in 1988, then, was to acknowledge the gravity of

the worm incident while avoiding structural overreaction to it. The most concrete outcomes were a criminal prosecution of Morris, an apparent consensus within the Internet technical community regarding individual responsibility for the problem, and the Department of Defense’s creation of the CERT Coordination Center to monitor the overall health of the Internet and dispatch news of security threats121 —

33

leaving preemptive or remedial action in the hands of individual computer operators.

Compared to the computers connected to the 1988 Internet, the

computers connected to the proprietary consumer networks of the 1980s described in Part II were not as fundamentally vulnerable because those networks could be much more readily purged of particular security flaws without sacrificing the very foundations of their existence.122 For example, although it would have been resource intensive, CompuServe could have attempted to scan its network traffic at designated gateways to prevent the spread of a worm. More important, no worm could have spread through CompuServe in the manner of Morris’s because the computers attached to CompuServe were configured as mere “dumb terminals”: they exchanged data, not executable files, with CompuServe and therefore did not run software from the CompuServe network. Moreover, the mainframe computers at CompuServe with which those dumb terminals communicated were designed to ensure that the line between users and programmers was observed and digitally enforced.123

Like CompuServe, the U.S. long-distance telephone network of the

1970s was intended to convey data — in the form of telephone conversations — rather than code between consumers. In the early 1970s, several consumers who were curious about the workings of the telephone network discovered that telephone lines used a tone at a frequency of 2600 Hertz to indicate that they were idle.124 As fortune would have it, a toy whistle packaged as a prize in boxes of Cap’n Crunch cereal could, when one hole was covered, generate exactly that tone.125 People in the know could dial toll-free numbers from their home phones, blow the whistle to clear but not disconnect the line, and then dial a new, non–toll free number, which would be connected without incurring a charge.126 When this imaginative scheme came to light, AT&T reconfigured the network so that the 2600-Hertz tone no longer controlled it.127 Indeed, the entire notion of in-band signaling was eliminated so that controlling the network required something other than generating sound into the telephone mouthpiece. To accomplish this reconfiguration, AT&T separated the data and executa-

34

ble code in the network — a natural solution in light of the network’s centralized control structure and its purpose of carrying data, not hosting user programming.

An understanding of AT&T’s long-distance telephone network and

its proprietary information service counterparts, then, reveals both the benefit and the bane of yesterday’s Internet and today’s generative grid: the Internet’s very channels of communication are also channels of control. What makes the Internet so generative is that it can transmit both executable code to PCs and data for PC users. This duality allows one user to access her news feeds while another user creates a program that aggregates hundreds of news feeds and then provides that program to millions of other users. If one separated data from the executable code as AT&T or CompuServe did, it would incapacitate the generative power of the Internet.

CompuServe’s mainframes were the servers, and the consumer

computers were the clients. On AT&T’s network, both the caller and the call’s recipient were “clients,” with their link orchestrated by a centralized system of switches — the servers. On the Internet of both yesterday and today, a server is definable only circularly: it is a computer that has attached itself to the Internet and made itself available as a host to others — no more, no less.128 A generative PC can be used as a client or it can be turned into a website that others access. Lines between consumer and programmer are self-drawn and self-enforced.

Given this configuration, it is not surprising that there was little

momentum for collective action after the Morris worm scare. The decentralized, nonproprietary ownership of the Internet and its computers made it difficult to implement any structural revisions to the way they worked. And more important, it was simply not clear what curative steps would not entail drastic and purpose-altering changes to the very fabric of the Internet: the notion was so wildly out of proportion to the level of the perceived threat that it was not even broached.

2. The PC/Network Grid and a Now-Realized Threat. — In the absence of significant reform, then, how did the Internet largely dodge further bullets? A thorough answer draws on a number of factors, each instructive for the situation in which the grid finds itself today.

First, the computer scientists were right that the ethos of the time

frowned upon destructive hacking.129 Even Morris’s worm arguably

35

did more damage than its author had intended, and for all the damage it did cause, the worm had no payload other than itself. Once the worm compromised a system, it would have been trivial for Morris to have directed the worm, say, to delete as many files as possible. Like Morris’s worm, the overwhelming majority of viruses that followed in the 1990s reflected similar authorial restraint130: they infected simply for the purpose of spreading further. Their damage was measured both by the effort required to eliminate them and by the burden placed upon network traffic as they spread, rather than by the cost of reconstructing lost files or accounting for sensitive information that had been compromised.131

Second, network operations centers at universities and other institutions became full-time facilities, with professional administrators who more consistently heeded the admonitions to update their patches regularly and to scout for security breaches. Administrators carried beepers and were prepared to intervene quickly in the case of a system intrusion. And even though mainstream consumers began connecting unsecured PCs to the Internet in earnest by the mid-1990s, their machines generally used temporary dial-up connections, greatly reducing the amount of time per day during which their computers were exposed to security threats and might contribute to the problem.

Last, there was no commercial incentive to write viruses — they

were generally written for fun. Thus, there was no reason that substantial resources would be invested in creating viruses or in making them especially virulent.132

Each of these factors that created a natural security bulwark has

been attenuating as powerful networked PCs reach consumers’ hands. Universal ethics have become infeasible as the network has become ubiquitous: truly anyone who can find a computer and a connection is allowed online. Additionally, consumers have been transitioning to

36

always-on broadband,133 their computers are ever more powerful and therefore capable of more mischief should they be compromised, and their OSs boast numerous well-known flaws. Furthermore, many viruses and worms now have purposes other than simply to spread, including purposes that reflect an underlying business incentive. What seemed truly remarkable when discovered is now commonplace: viruses can compromise a PC and leave it open to later instructions, such as commanding it to become its own Internet mail server, sending spam to e-mail addresses harvested from the hard disk, or conducting web searches to engage in advertising fraud, with the entire process typically unnoticeable by the PC’s owner. In one notable experiment conducted in the fall of 2003, a researcher connected to the Internet a PC that simulated running an “open proxy,” a condition unintentionally common among Internet mail servers.134 Within ten hours, spammers had found the computer and had begun attempting to send mail through it.135 Sixty-six hours later, the computer had recorded an attempted 229,468 distinct messages directed at 3,360,181 would-be re-cipients.136 (The researcher’s computer pretended to forward the spam but in fact threw it away.137)

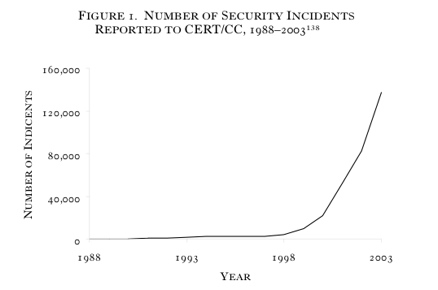

CERT Coordination Center statistics reflect this sea change. The

organization began documenting the number of attacks against Internet-connected systems — called “incidents” — at its founding in 1988. As Figure 1 shows, the increase in incidents since 1997 has been exponential, roughly doubling each year through 2003.

37

FIGURE 1. NUMBER OF SECURITY INCIDENTS

REPORTED TO CERT/CC, 1988–2003138

CERT announced in 2004 that it would no longer keep track of this figure because attacks have become so commonplace and widespread as to be indistinguishable from one another.139

At the time of the Morris worm, there were an estimated 60,000