We came up with four topics for future discussion. You may think of others. Because we have to file this on Friday, and that means if you want to contribute a thought, please do it by Wednesday.

We are also publishing a more detailed supplement on those four topics, explaining in depth why we propose those four topics, providing some foundation and providing resources. This we will not sent to the USPTO, but it is referenced in the document we will send, if they wish to read more. If you think my decision on that is flawed, let me know. But all they asked for is topics. If we overwhelm them with a lot of what they didn't ask for, I worry that it would be counterproductive. The USPTO will be publishing the comments they receive, and so both documents will be available to the world, and people can read it or not based on their interest level, which is part of the purpose. I would particularly appreciate your help with the supplement.

First, here's the document we will send to the USPTO, the list of the four proposed topics for discussions, after which you'll find the supplement. Feel free to comment on either document:

Response to the USPTO on the Second Topic:

Suggested Additional Topics for Future Discussion by the Software Partnership

In response to the USPTO's request for topics for future discussion by the Software Partnership, the technical community at Groklaw suggests the following four topics, in order of priority:

1: Is computer software properly patentable subject matter?

2: Are software patents helping or hurting innovation and

the US economy?

3: How can software developers help the USPTO

understand how computers actually work, so issued patents

match technical realities, avoiding patents on functions that

are obvious to those skilled in the art, as well as avoiding duplication of prior art?

4: What is an abstract idea in software and how do *expressions* of

ideas differ from *applications* of ideas?

In order to explain why these topics could be fruitful, here are some brief thoughts in explanation.

Suggested topic 1:

Is computer software properly patentable subject matter?

If software consists of two elements neither of which is patentable subject matter,

can software itself by patentable subject matter? Software consists of algorithms,

in other words mathematics, and data, which is being manipulated by the algorithms. Mathematics

is not patentable

subject matter and neither is data. On what basis, then,

is software patentable subject matter? We would welcome a

discussion on this topic, as it is a key issue to the developer community. Note

that Groklaw has published a number of articles on this topic, which can be found

at http://www.groklaw.net/staticpages/index.php?page=Patents2

A particular point of interest is how the meaning of data influences the patentable

subject matter analysis. Computers manipulate bits, and bits are electronic symbols

which are used to convey meaning. In some patents, like in Diehr's

industrial process

for curing rubber, what this meaning signifies is actually claimed clearly.

But in most pure

software patents the meaning is merely referred to. Should this distinction influence

whether the claim is patentable?

Suggested topic 2:

Are software patents helping or hurting the US innovation and

hence the economy?

It would be useful to hear from entrepreneurs on a wide scale on the effects software

patents are having on their startups or business projects. Microsoft's Bill Gates

himself has stated

that if software patents had been allowed when he was starting his business, he would have

been blocked.1a Is that happening to today's entrepreneurs? If

software authors are unable to clear

all rights to their own products because there is no practical way to do

so, how can that foster progress and innovation? Rather it seems to force

developer, or the companies that hire them, to

choose between developing innovative products with the certainty that if it is successful,

there will be

infringement lawsuits, or stop developing.

Every firm with an internal IT

department writes software. Every firm which maintains it own website writes

software. There are roughly 634,000 firms in the United States with 20 or more

employees. While not all of these firms produce software, many of the 1.7 million

firms with 5 to 19 employees do. In an ideal world, all firms should verify all

patents as they are issued to avoid infringement. This is a constant on-going

activity because corporate software must frequently be adapted to new needs and all

new versions may potentially infringe a patent. A study has concluded the task is

mathematically impossible to

accomplish.2a

Even if a patent lawyer only needed to look at a patent for 10 minutes, on average,

to determine whether any part of a particular firm's software infringed it, it would

require roughly 2 million patent attorneys, working full-time, to compare every

firm's products with every patent issued in a given year.

This is a mathematical impossibility because there are only roughly 40,000 registered

patent attorneys and patent agents in the US.

This estimation covers just the work of keeping up with the newly issued patents

every year. Checking already issued patents would require more attorneys.

This result follows from simple arithmetic. Suppose we assume that

40,000 patents are issued

per year, 600,000 firms actively write software, resulting in

approximately 2000 billable

hours per attorney per year. Then the math is straightforward: 40,000 patents*600,000

firms*(10 minutes per patent-firm pair)/(2000*60 minutes per attorney)=2 million

attorneys.

Compare lines of

code with sentences in a book. Each English sentence expresses an idea. Each

combination of sentences expresses a more complex idea. Then more and more complex

ideas are expressed into paragraphs, chapters etc. The total number of ideas from all

works of authorship is extremely large. Imagine an hypothetical intellectual property

regime where all such ideas are patentable. This would generate a large number of

patents. All authors must check all patents

for potential infringement. It simply is not possible to do so.

It is impossible to promote innovation with a system where the authors of software

have no way to verify they own all rights to their own work. Such a system is

guaranteed to harm the economy with monopolistic rent-seeking and unneeded

litigation.

Suggested topic 3:

How can software developers help the USPTO

understand how computers actually work, so issued patents

match technical realities, avoiding patents on functions that

are obvious to those skilled in the art, as well as avoiding duplication of prior art?

The current interpretation of patent law is plagued with what developers view as

erroneous conceptions of how computers work. Other than the current USPTO request for

input, developers feel shut out of decisions, and yet they are the very ones who

understand what software is and how it does what it does.

Textbooks describe in detail what mathematical algorithms are, but no one seems to

understand or to reference these sources. Instead, we see courts using

standard dictionaries, and the

result is confusion about what algorithms are. An unrealistic distinction between

so-called mathematical algorithms and those that purportedly are not mathematical has

been the result. Since this impacts the controversy of when a computer-implemented

invention is directed to a patent-ineligible abstract idea, it's a serious omission.

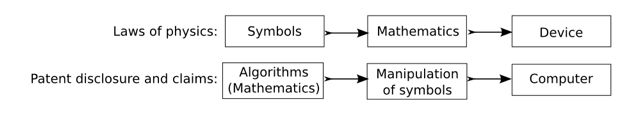

Second, it seems some, including some courts, believe the functions of software

are performed through

the physical properties of electrical circuits,

incorrectly treating the computer as a device which operates solely through the laws of

physics. This approach is factually and technically incorrect

because not everything in software

functions through the laws of physics. Bits are symbols, and they have meanings. The

meaning of bits is essential to performing the functions of software. The capability

of bits to convey meaning is not a physical property of the computer.

Software developers don't write software by working with the physical properties of

circuits. Developers define the meaning of data and implement operations of

arithmetic and logic that apply to the meaning. They debug software by reading the

meaning of the data stored in the computer and verifying whether the correct

operations are performed. The aspects of software related to meaning cannot be

explained solely in terms of the physical properties of the computer.

This erroneous physical view of the computer is the basis of an oft-stated

argument. Some claim that software alters the computer it runs on. This is used to

justify the view that software patents are actually a subcategory of hardware

patents. But to demonstrate what is wrong with that argument, let's compare a

printing press with a computer.

It is easy to see that the contents of a book is not a machine part. The meaning of a

book is not explained by the laws of physics applicable to a printing press. But the

comparison with a computer shows that there is no material difference in their

handling of meaning. Any argument applicable to a printing press is applicable to a

computer and vice-versa.

Imagine a claim on a printing press configured to print a specific book, say

Tolkien's Lord of the Ring. This is a claim on a machine which operates according to

the laws of physics. Printing is a physical process for laying ink on paper. It

functions without the intervention of a human mind. But still this process involves

the meaning of a book. The claim is infringed only if the book has the recited

meaning.

We may argue that a configured printing press is physically different from an

unconfigured one. The configured printing press can print a book while the

unconfigured one cannot. Books with different contents are different articles of

manufacture. Differently configured printing presses perform different functions

because they make different articles of manufacture. Therefore, as this hypothetical

argument goes, a printing press configured to print a specific book is a specific

machine which performs a specific practical and useful task.

Software patents are often written similarly to this analogy. Like a printing

press, the computer operates according to the laws of physics. It functions mostly

without the intervention of the human mind, although from time to time human input

may be required. But the process of computing needs the meaning of the data to

actually solve problems. The claim is infringed only if the data has the recited

meaning.

The standard argument that a programmed computer is different from an unprogrammed

one is exactly symmetric to the one we have just made about printing presses. There

is a reason for this. The technologies are not that different. A computer connected

to a printer can be configured to print a book. Also modern printing presses may be

controlled by embedded computers.

There is no material difference between a configured printing press and a programmed

computer in their handling of meaning. Users of a computer read the meaning of

outputs. They also enter the inputs based on the meaning. When programming a

computer, programmers must define the meaning of data. They implement algorithms

which perform operations of arithmetic and logic on the meaning of this data. When

debugging, programmers must inspect the internals of the computer to determine

whether the correct operations are being performed. This requires reading the

contents of computer memory and verifying it has the expected meaning. In other

words,

the act of making the invention depends on defining and reading the data stored in

the computer. Software works only if the data has the correct meaning.

The output of a printing process is a book. Different books are distinguished by

their contents. A typographer must define and verify the contents of the information

to be printed to configure the printing press correctly. In other words, the act of

making the invention depends on defining and reading the data stored in the printing

press. A printing press works precisely because it prints the right contents.

Printing makes a physical book which can be read and sold. Books with different

contents are different books. A wrongly configured printing press prints the wrong

book. Therefore the utility of the printing press doesn't depend just on the laws of

physics. It also depends on the contents of the book.

Both machines work in part according to the laws of physics and in part through

operations of meaning.

The courts have failed to acknowledge the role of meaning in software.

Some errors result from the failure to take into

consideration the descriptions of what is a mathematical algorithm in mathematical

literature. Other errors result from explicitly and incorrectly denying the role of

symbols and meaning in computers. And more errors result from the belief that

computers operate solely through the physical properties of electrical circuits.

Imagine now that every time a printing press prints a new book, you could patent that

printing press as a new machine because it printed a new book. That is exactly what

patent law does with software, purporting to create a new machine because new

software running on the computer supposedly creates a new machine. And yet the

computer, like a printing press, can run any software at all that you can devise,

just as a printing press can print any book you write.

No one

would allow a patent on a previously existing printing press just because it is now

configured to print a new novel. Yet that is exactly what is allowed with software.

The consequence is a proliferation of patents on the expressions of ideas.

Suggested topic 4:

What is an abstract idea in software and how do expressions of

ideas differ from applications of ideas?

Abstract is not synonymous with vague or overly broad. A mathematical algorithm is

narrowly defined with great precision, but still it is abstract.

Abstract is not the opposite of useful. The ordinary procedure for carrying an

addition is a mathematical algorithm. It has a lot of practical uses in accounting,

engineering and other disciplines. But still it is abstract. In particular it is

designed to handle numbers arbitrarily large no matter whether we have the practical

means of writing down all the digits. Besides, there are useful abstract ideas

outside of mathematics. For example the contents of a reference manual such as a

dictionary is both abstract and useful.

Mathematics is abstract in part because it studies infinite structures.

For example,

the series of natural numbers 0, 1, 2, ... cannot exist in the concrete universe,

because it is infinite. Also, symbols in a mathematical sense are abstract entities

distinct from the marks on paper or their electronic equivalent. For example, there

are infinitely many decimals of pi even though there is no practical way to write

them down. Infinity guarantees that mathematics is abstract. Therefore a definition

of "abstract ideas" must

acknowledge the abstractness of mathematics.

A proper

understanding of the role of meaning is key to understanding when a claim is directed

to a patent-ineligible abstract idea in software.

Software patents don't claim abstract ideas directly. They claim them indirectly

through the use of a physical device to represent them by means of bits. It would be

easier to recognize claims on patent-ineligible abstract ideas if it were understood

they take the form of claims on expressions of ideas as opposed to

applications of

ideas. The bits are symbols and the computation is a manipulation of the symbols.

Expressions of ideas occur through this use of symbols.

This suggests a test similar to the printed matter doctrine. This test is best

described using the concepts and vocabulary of a social science called semiotics.

This science studies signs, entities which are used to stand for something else.

Computers should be recognized to be what semioticians call sign-vehicles, physical

devices which are used to represent signs. The sign itself is an abstraction

represented by the sign-vehicle. Hence, sign-vehicles and signs are distinct

entities.

In semiotics the triadic notion of a sign distinguishes between two types of meaning.

There is the actual worldly thing denoted by the sign. This is called the referent.

And there is the idea of that thing a human being would derive from reading the sign.

This is called an interpretant. A sign usually conveys both types of meanings

simultaneously. An example might be a painting representing a pipe. The painting itself

is a sign-vehicle. People seeing this painting will think of a pipe. This thought is

an interpretant. An actual pipe is a referent.

If nothing has been invented but thoughts in the mind of human beings, we should not

be able to claim a sign-vehicle expressing these ideas as if they were applications

of the ideas. But when the real thing denoted by the expression is claimed, we may

have a patentable invention. For example a mathematical calculation for curing rubber

standing alone is not patentable under this test. It is just numbers letting a human

think about how rubber should be cured. But when the actual rubber is cured the

referent is recited and the overall process taken as a whole may be patentable. These

ideas lead to this test.

A claim is directed to a patent-ineligible abstract idea when there is no nonobvious

advances over the prior art outside of the interpretants. A claim is written to an

application of the idea when the referent is claimed instead of merely referred to.

This test is technology-neutral. It is applicable precisely when the claimed

invention is a sign, or when it is a machine or process for making a sign. It applies

whether the invention is software, hardware or some yet to be invented technology.

This test works without having to define the boundary between what is software and

what isn't.

The concepts of semiotics are quite simple and easy to define. They are related to

the dichotomy between ideas and expression of ideas in copyright law. Therefore this

test for abstract ideas helps clarify the line between what should be protected with

copyrights and what belongs to patent law. The expressions of interpretants may be

protected by copyrights and the corresponding referents may be protected by patents.

This test will correctly identify abstract mathematical ideas. Mathematics is, among

other things, a written language. It has a syntax and a meaning which are defined in

textbooks on topics such as mathematical logic. Algorithms in the mathematical sense

are features of this language. They are procedures for manipulating symbols. They

solve problems because they implement operations of arithmetic and logic on the

meaning of the symbols. Algorithms are also procedures which are suitable for machine

implementation. Computer programs may solve a problem only if it is amenable to an

algorithmic solution. In this sense, all software executes a mathematical algorithm.

Mathematical language refers to abstract mathematical entities such as numbers,

geometric shapes, etc. We assimilate this abstract meaning with interpretants.

Mathematical language may also be used to describe things in the concrete world, for

instance using laws of physics. The corresponding referents are applications of

mathematics. Mathematical algorithms and other types of mathematical subject matter

are a subcategory of interpretants. And things in the concrete world modeled using

mathematical language are a subcategory of referents. Hence the proposed test will

properly distinguish between the expression of a mathematical idea from an

application of the same idea. Claims of applications are accepted while claims on

expressions are rejected.

Groklaw has published this document here, along with a more detailed explanation, with references,

on why we believe these four topics could be fruitful topics for discussion.

__________

1a Gates exact words were:

"If people had understood how patents would be granted when most of today's ideas were

invented, and had taken out patents, the industry would be at a complete standstill

today."

"Challenges and Strategy" (16 May 1991).

Also from: http://bat8.inria.fr/~lang/reperes/

local/Challenges.and.Strategy

2a See Mulligan, Christina and Lee, Timothy B., Scaling the Patent System (March 6,

2012). NYU Annual Survey of American Law, Forthcoming. Available at SSRN:

http://ssrn.com/abstract_id=2016968.

The quoted paragraph is at pages 16-17.

And here is the more detailed explanation, the supplement. And this is where I really need your help. I didn't write it, although I've tried to organize it, but I'm still editing it to try to make it a little more conversational, so if you see errors or shades of meaning that are slightly off, please say so:

Supplement to the Response to the USPTO on the Second Topic

Detailed Explanations on the Proposed Topics

by Groklaw

The patent system should distinguish the expression of an abstract idea from an application of an idea. Currently the distinction between the two is not correctly made in the legal analysis of software patents. Expressions of ideas are being mistaken for applications and are patented as a result. This makes it confusing to try to define what is a claim directed to patent-ineligible abstract ideas.

Because of this confusion, innovation is not being promoted as intended. When expressions of ideas are patented, none of the assumptions underlying the operating principles of the patent system are true. Therefore the expected benefits of patents are not realized.

Recognizing that an expression is not an application would make it possible for the patent system to be able to recognize claims directed to abstract ideas and a major source of problems with software patents would be corrected.

A. Factual Background

Let's begin by defining some important terms and summarizing some fundamental facts about computers, software, and the underlying mathematical principles.1

1. Semiotics defines the concepts and vocabulary necessary to understand issues of meaning.

In the social science of semiotics, a thing that stands for something else is called a sign. Books and computers when associated with their meanings are examples of signs. Semiotics defines the concepts and vocabulary we need to properly analyze meaning. We present here the basic concepts which will be used in the rest of this response.

A sign in the Piercean tradition has three elements. The physical object used to represent the sign is called a sign-vehicle. The entity in the world which is denoted by the sign is called the referent. The idea a human being would form of the meaning of the sign is called an interpretant. This triadic view of a sign is traditionally represented as a triangle.

We may use the famous painting The Treachery of Images as an illustration of these notions. This painting represents a pipe with the legend "Ceci n'est pas une pipe" which means "This is not a pipe" in French. The point is that a painting of a pipe is a representation of a pipe. It is not the pipe itself. In this example, the painting is the sign-vehicle, the actual pipe is the referent, and the idea of a pipe in the human mind is the interpretant.

We have a sign when there is a convention on how to associate the sign-vehicle with its meaning. In the case of the painting this convention is the practice of associating a visual representation of something with what is represented.

Please note how the sign-vehicle is only an element of a sign. It is not the whole sign. The three elements must be brought together in order to have a sign. A human interpreter with the knowledge of the convention will mentally assemble the three elements and "make the sign" by associating the sign-vehicle with its meaning. This is a cognitive process of the human mind called

semiosis. In particular, books and computers when taken as physical objects independently of their meanings are sign-vehicles. They are not the whole signs because semantical elements are not physical parts of books and computers. These sign-vehicles are turned into signs by semiosis when a human interpreter read meanings into them.

2. Mathematics is a written language based on logic and algorithms are procedures for manipulating symbols in this language.

Mathematics is a written language. We can find the definition of its syntax and semantics in textbooks on the foundations of mathematics, especially mathematical logic.2 There is more to mathematics than the language. Mathematical entities like numbers and geometrical figures are also mathematics. But for the purpose of this discussion it is the linguistic aspect that matters most.

Concepts such as formulas, equations and algorithms are part of this mathematical language.

A mathematical formula is text written with symbols in this mathematical language. It is the equivalent of a sentence in English. An equation is a special kind of formula which asserts that two mathematical expressions refer to the same value.

The famous equation E=mc2 is an example of such a mathematical formula. The meaning of this formula is a is a law of nature, actually a law of physics. It is a statement relating the mass of an object at rest with how much energy there is in this object. This shows how mathematical language may be used to describe the real world.

The formula implies a procedure to compute the energy when the mass is known. Here it is:

-

Multiply the speed of light c by itself to obtain its square c2.

-

Multiply the mass m by the value of c2 obtained in step 1.

-

The result of step 2 is the energy E.

This kind of procedure is known in mathematics as an algorithm. The formula is not the algorithm. This procedure is the algorithm. Someone with sufficient skills in mathematics will know the algorithm simply by looking at the formula. This is why it is often sufficient to state a formula when we want to state an algorithm.

The task of carrying out the algorithm is called a computation. When carrying out the algorithm with pencil and paper we have to write mathematical symbols, mostly digits representing numbers but also other symbols such as the decimal point. These writings too are parts of mathematical language. In the example, the meaning of the writings are numbers representing the speed of light, its square, the mass and the energy of an object.

A function is not an algorithm.

Mathematicians distinguish between a function and an algorithm. Hartley Rogers explains:3 (emphasis in the original)

It is, of course, important to distinguish between the notion of algorithm, i.e., procedure, and the notion of function computable by algorithm, i.e., mapping yielded by procedure. The same function may have several different algorithms.

A mathematical function is a correspondence between one or more input values and a corresponding output value. For example the function of doubling a number associates 1 with 2, 2 with 4, 3 with 6 etc. Nonnumerical functions also exists.

A function is not a process. There is no requirement that the function must be computed in a specific manner. All methods of computation which produce the same output from the same input compute the same function.

Despite the similarly sounding words, a software function is not the same thing as a mathematical function. However the two concepts are closely related. If we look at the underlying principles of mathematics which are at the foundations of computer science the functions of software are described with mathematical functions.4 The methods used to perform the functions of software are implemented using mathematical algorithms.

The language of mathematics is based on logic

There is a close connection between logic and mathematics. Theorems are proven by means of deductions where the formulas and expressions in mathematical language are organized according to the rules of logic. Most mathematical truth are established in this manner.

The relationship between mathematics and logic is explained by Haskell Curry as follows:5 (emphasis in the original, footnote omitted)

The first sense is that intended when we say that "logic is the analysis and criticism of thought." We observe that we reason, in the sense that we draw conclusions from our data; that sometimes these conclusions are correct, sometimes not; and that sometimes these errors are explained by the fact that some of our data were mistaken, but not always; and gradually we become aware that reasonings conducted according to certain norms can be depended on if the data are correct. The study of these norms, or principles of valid reasoning, has always been regarded as a branch of philosophy. In order to distinguish logic in this sense from other senses introduced later, we shall call it philosophical logic.

In the study of philosophical logic it has been found fruitful to use mathematical methods, i.e., to construct mathematical systems having some connection therewith. What such system is, and the nature of the connection, are question which will concern us later. The systems so created are naturally a proper subject for study in themselves, and it is customary to apply the term 'logic' to such a study. Logic in this sense is a branch of mathematics. To distinguish it from other senses, it will be called mathematical logic.

…

[A]lthough the distinction between the different senses of 'logic' has been stressed here as a means of clarifying our thinking, it would be a mistake to suppose that philosophical and mathematical logic are completely separate subjects. Actually, there is unity between them. mathematical logic, as has been said, is fruitful as a means of studying philosophical logic. Any sharp line between the two aspects would be arbitrary.

Finally, mathematical logic has a peculiar relation to the rest of mathematics. For mathematics is a deductive science, at least in the sense that a concept of rigorous proof is fundamental to all parts of it. The question of what constitutes a rigorous proof is a logical question in the sense of the preceding discussion. The question therefore falls within the province of logic; since it is relevant to mathematics, it is expedient to consider it in mathematical logic. Thus the task of explaining the nature of mathematical rigor falls to mathematical logic, and indeed may be regarded as its most essential problem. We understand this task as including the explanation of mathematical truth and the nature of mathematics generally. We express this by saying that mathematical logic includes the study of the foundations of mathematics.

Mathematical logic is also part of the mathematical underpinnings of computer science.5

To summarize the main points, mathematics is a written language. It has a syntax and a semantics. It is used to establish theorems by means of logical proofs. Formulas and equations are expressions in this language. Computations and algorithms are elements of this language which are used to solve problems.

3. Mathematicians have defined their requirements for a procedure to be accepted as a mathematical algorithm.

If we seek a definition in the sense of a short dictionary-like description of an algorithm we won't find one which is universally accepted. But if we read textbooks of computation theory and mathematical logic we find full text descriptions of what it takes for a procedure to be a mathematical algorithm. These descriptions vary in their choice of words and some authors mention aspects others omit. It is best to read a few of them to obtain a complete picture.

Here are the collection of requirements for a procedure to be an algorithm which are mentioned by one or another of the authors cited in the footnote.6

Procedures to actually solve a category of problems

An algorithm is a procedure intended to actually solve a category of problems. It takes one of more inputs describing the specific problem and it produces the corresponding solution. This means the procedure is meant to be carried out, at least in principle if not in practice. If it is followed without error it will produce the correct answer. Stoltenberg-Hansen, Lindström and Griffor explain:7 (emphasis in the original)

An algorithm for a class K of problems is a method or procedure which can be described in a finite way (a finite set of instructions) and which can be followed by someone or something to yield a computation solving each problem in K.

Mathematicians sometimes call algorithms "effective procedures". This concept is broadly defined in intuitive terms because it is intended to be open ended. Researchers constantly discover new ways of defining and carrying out procedures able to actually solve problems. Their notion of algorithm isn't strictly defined because they don't want to exclude from the concept these future discoveries. When they need mathematical rigor mathematicians study specific models of computations like Turing machines, recursive functions or λ-calculus.

Manipulation of symbols

All algorithms are ultimately procedures for manipulating symbols. Stoltenberg-Hansen, Lindström and Griffor explain:8 (emphasis in the original)

It is reasonable to assume, by the intended meaning of an algorithm explained above, that each problem in K should be a concrete or finite object. We say that an object is finite if it can be specified using finitely many symbols in some formal language.

Sometimes we encounter a discussion of algorithms which presumes the computations are about numbers. For a mathematician the two concepts of arithmetic and symbolic computations are equivalent.

Boolos, Burgess and Jeffrey explain one aspect of this equivalence by pointing our that ultimately numbers must be represented by means of symbols when doing arithmetic calculations:9 (emphasis in the original, link added)

When we are given as argument a number n or pair of numbers (m, n), what we in fact are directly given is a numeral for n or an ordered pair of numerals for m and n. Likewise, if the value of the function we are trying to compute is a number, what our computations in fact end with is a numeral for that number. Now in the course of human history a great many systems of numeration have been developed, from the primitive monadic or tally notation, in which the number n is represented by a sequence of n strokes, through systems like Roman numerals, in which bunches of five, ten, fifty, one-hundred, and so forth strokes are abbreviated by special symbols, to the Hindu-Arabic or decimal notation in common use today.

Conversely, the same authors explains that symbols may be represented as numbers. Then symbolic computations may be defined in terms of arithmetical calculations:10 (emphasis in the original, link added)

A necessary preliminary to applying our work on computability, which pertained to functions on natural numbers, to logic, where the object of study are expressions of a formal language, is to code expressions by numbers. Such a coding of expressions is called a Gödel numbering. One can then go on to code finite sequences of expressions and still more complicated objects.

This may sound as a chicken and egg problem. Which is defined first? The manipulation of symbols or the manipulation of numbers? Actually is is impossible to manipulate numbers directly without first representing them as symbols of some sort. Even when a computation is defined as an operation of arithmetic it is ultimately a manipulation of symbols.

Finite description

An algorithm must be described with a finite number of symbols.11 It is not possible to learn and execute an procedure whose description is infinite. This requirement may seem obvious but much of mathematics is about infinite structures, like the set of natural numbers or the decimal expansion of pi. Mathematicians felt the need to set this boundary for themselves.

Precise definition

The steps must be defined precisely so we know exactly how to execute them. Boolos, Burgess and Jeffrey describe this requirement as follows:12

The instruction must be completely definite and explicit. They should tell you at each step what to do, not tell you to go ask someone else what to do, or to figure out for yourself what to do: the instructions should require no external source of information, and should require no ingenuity to execute, so that one might hope to automate the process of applying the rules, and have it performed by some mechanical device.

Actual execution

A procedure doesn't solve a problem unless and until it is actually executed. The requirements finite description and precise definition are meant to instruct exactly how the procedure should be executed.13

This requirement of actual execution has a consequence. An algorithm imposes a burden on the computing agent that executes it. The steps must be actually carried out and the symbols must be actually written. This burden is called computational complexity. It is measured by the number of steps which must be executed and by the amount of writing space required to write the symbols. This burden typically vary according to the size of the inputs. When the number of symbols in the inputs is larger the number of steps and the storage space required to read and process the inputs will also increase.

Independence from physical limitations

Mathematicians assume the agent executing the algorithm has unlimited time to carryout the computation and unlimited space to write symbols while computing. The goal is to separate the mathematical properties of the algorithm from the physical resources available to compute. Boolos, Burgess and Jeffrey describe this requirement as follows:14 (emphasis in the original)

There remains the fact that for all but a finite number of values of n, it will be infeasible in practice for any human being, or any mechanical device, actually to carry out the computation: in principle it could be completed in a finite amount of time if we stayed in good health so long, or the machine stayed in working order so long; but in practice we will die, or the machine will collapse, long before the process is complete. (There is also a worry about finding enough space to store the intermediate results of the computation, and even a worry about finding enough matter to use in writing down these results: there is only a finite amount of paper in the world, so you'd have to writer [sic] smaller and smaller without limit; to get an infinite number of symbols down on paper, eventually you'd be trying to write on molecules, on atoms, on electrons.) But our present study will ignore these practical limitations, and work with an idealized notion of computability that goes beyond what actual people or actual machines can be sure of doing. Our eventual goal will be to prove that certain functions are not computable, even if practical limitations on time, speed and amount of material could somehow be overcome, and for this purpose the essential requirement is that our notion of computability not be too narrow.

It is understood that an algorithm will be carried out in practice by a computing agent with finite amount of time and writing space, therefore the computation can only be done for a "finite number of values of n" as Boolos and al. put it. This doesn't mean the calculation isn't done according to the algorithm. It means that the algorithm is carried out only to the extent that sufficient resources are available. When the resources are exhausted the calculation stops prematurely and the answer is not reached.

For example consider the ordinary pencil and paper procedure of arithmetic for adding numbers. It is designed to produce the correct answer no matter how many digits are required to write the numbers. If the numbers have one trillion digits it may not be realistic to expect a live human to complete the task. Mathematicians still regard this procedure as a mathematically correct algorithm for addition. They consider that finding a computer powerful enough to carry out the task until completion is a separate issue from finding a mathematically correct procedure.

The purpose of this abstraction is to study the mathematical properties of the computation in itself, independently from the limitations of the computing agent. For example mathematicians want to know when a function cannot be computed at all regardless of the physical resources available. And they want to be confident that the algorithm produces the correct answer for all inputs. This procedure gives us a mathematical guarantee that an increase of the capabilities of the hardware will increase the range of computations which are practical without introducing errors because the algorithm is not limited to the capabilities of the current hardware.

Termination

This requirement is controversial.15 In some flavors of 'algorithm' it is omitted.

People who expect the algorithm to actually produce the answer demand that there is a point in time where the answer is available. This means there must be a finite number of steps after which the procedure is completed and the answer is available. But there are useful computational procedures which cannot meet this requirement. Stoltenberg-Hansen, Lindström and Griffor explain:16 (emphasis in the original)

The requirement that an algorithm should solve each problem in a class K is actually a requirement on the class K (to be algorithmically decidable) rather than on the concept of an algorithm. Indeed the notion of an algorithm is partial by its very nature. Regarding an algorithm as a finite set of instructions, there is certainly no a priori reason to expect the computation, obtained from applying the algorithm to a particular problem, to terminate.

An example is a procedure for computing the decimals of pi. This calculation can never be carried out until the end because there are infinitely many decimals. On the other hand it can compute the decimals of pi to an arbitrary degree of precision if we have the patience to carry it out long enough.

The main mathematical models of computation17 have the ability to define both algorithms which terminate and computational procedures which don't terminate.

Deterministic execution

This requirement is controversial.18 In some flavors of 'algorithm' it is omitted.

Some people expect the requirement of precise definition to imply that every step be deterministic, with no random element. But there are useful computational procedures that involve probabilistic steps, that is some steps have an outcome randomly selected from a predefined set of possibilities. This is called a randomized algorithm.

It is well know that a randomized algorithm can be transformed into a deterministic algorithm when a source of random numbers is available as an input. Then the random element is moved out of the calculation to the source of input. The calculation itself is deterministic relative to the input. Alternatively, pseudo-random number generators may be used to simulate nondeterminism by deterministic means.

4. Algorithms are machine-implementable because they rely only on syntax to be executed, but they solve problems because they implement operations of arithmetic and logic on the meaning of the data.

The requirement of precise definition permits the machine execution of algorithms. See the preceding quote of Boolos et al. An alternative statement of this requirement is given by Stephen Kleene as follows:19

In performing the steps we have only to follow the instructions mechanically, like robots; no insight or ingenuity or intervention is required of us.

If the meanings of the symbols are ambiguous it is impossible to execute the algorithm in this manner. Resolving the ambiguity is an intervention that requires insight or ingenuity. This requirement would not be met. On the other hand if the symbols are unambiguous, for purposes of executing an algorithm mechanically, like robots, their meanings are superfluous. For example, when evaluating a single bit there is no need for the step of noticing the symbol means the boolean value true when we already know the symbol is the numeral 1 because this numeral always means true in boolean context.

For the sake of comparison, here is an example of a procedure which is not an algorithm: Interim Examination Instructions For Evaluating Subject Matter Eligibility Under 35 U.S.C. § 101 (PDF). Legal procedures such as this one require the human to consider the meaning of the information and then inject additional information based on his experience, knowledge, and convictions to reach a decision. They require a lot of insight and ingenuity to be executed and for this reason they are not mathematical algorithms.

A consequence of this requirement is that the algorithm operates only on the syntax of the mathematical language. It doesn't operate on the meaning. This point has been noticed by Richard Epstein and Walter Carnielli20, where they describe a series of models of computations used to define classes of algorithms:21

What all of these formalizations have in common is that they are all purely syntactical despite the often anthropomorphic descriptions. They are methods for pushing symbols around.

Human beings may be taught procedures to process data based on their meanings. Computers can't. They must be programmed to execute algorithms. This is a prerequisite for writing a machine executable program. If the procedure is not an algorithm it is not possible to program a computer for it.

But then what is the role of meaning? It defines the problem and its solution. There is a whole body of computation theory which analyzes computation from the point of view syntactic manipulations of symbols. But this literature is limited in the study of which problems are solved by these algorithms. For that we need the meaning.

The art of the programmer is to find an algorithm which corresponds to operations of arithmetic and logic that solves the problem.

As a first step the programmer must define how the data elements will be representing symbolically, with bits.22 This task is referred to with phrases such as: defining a data model, defining data structures and defining data formats. This task amounts to defining how to represent the problem and its solution in a suitable language of symbols. Then as a second step the programmer must find an algorithm operating on this data that will produce the correct outputs. This means the programmer must find a way to manipulate the symbols without referring to their meanings and still reach the correct answer. If the programmer fails to to find such a procedure he cannot write a machine executable program.

The connection between logic and data is key. Well chosen logical inferences can solve practical problems. They can be turned into algorithm using data types. Consider the following series of statements.

-

"Abraham Lincoln" is a character string.

-

"Abraham Lincoln" is the name of a human being.

-

"Abraham Lincoln" is the name of a politician.

-

"Abraham Lincoln" is the name of a president of the United States of America.

Each of them is attaching a data type to the character string "Abraham Lincoln". Most computer languages are only concerned with the data type in line 1. This is all they need to generate executable code. But logicians have been interested in more elaborate forms of data typing. Each of the statements mentions a valid data type in this logical sense. Logicians have noticed that data types corresponds to what they call "predicates" which are templates to form propositions that are either true or false. For example "is the name of a president of the USA" is a predicate. If you apply it to "Abraham Lincoln" you are stating the (true) proposition that "Abraham Lincoln" is the name of a president of the USA. And if you attach the same predicate to "Albert Einstein" you get a similar but false proposition.

When writing a program, programmers must first define their data. They don't just define the syntactic representation in terms of bits. They also define what the data will mean. A logician would say they define the logical data types, the predicates which are associated with the data. These predicates are documented in the specifications of the software, in comments included in the source code or in the names they give to the program variables. This knowledge is essential in understanding a program. However these predicates are not used for generating machine executable instructions. The predicates are not used by the computer for the manipulation of the symbols. During execution the predicates are implicit. They are defined by a convention the reader must know in order to be able to read the symbols correctly. They are for human understanding and verification that the program indeed does what it is intended to do.23 And they are also for the user of the program as he needs to understand the meanings of the inputs and outputs in order to use the program properly.

Data types in this extended logical sense relate to algorithms as follows. If you expect the data to be of some type, then the data is implicitly stating a proposition. You can tell which proposition by applying the predicate to the data. If you expect a quantity of hammers in your inventory and the data you get is 6, then you implicitly have a statement that you currently have 6 hammers in stock. All data is implicitly the statement of a proposition corresponding to its logical type.

When the algorithm process the data it implicitly carries out logical inferences on the corresponding propositions because a correctly working program must always produce data of the correct type. For example if you ask a program for the birth date of Theodore Roosevelt and the program returns October 27, 1858, it implicitly states that this is the birth date of Theodore Roosevelt because this is the proposition corresponding to the expected data type. The definition of correctness for a program is that it produces a logically correct answer. Programmers are well aware of this correspondence between predicates, data and correctness. They use it to design, understand and verify their programs.

There is a whole body of theory on how algorithms correspond to logic based on logical data types. The Curry-Howard correspondence is part of this theory. It works like a translation, similar to translating between Russian and Chinese, except that the translation is between two mathematical languages. If the algorithm is expressed in the language of λ-calculus then the Curry-Howard correspondence translates the algorithm into a proof of mathematical logic expressed in the language of predicate calculus. The translation works also in the other direction. Proofs of mathematical logic may likewise be translated into algorithms. In this sense an algorithm is really another expression for rules of logic. The difference is a matter of form and not substance.

As an alternative, when the algorithm is written in an imperative language instead of λ-calculus it may be assigned a logical semantics using Hoare logic. Poernomo, Crossley and Wirsing argue that Hoare logic is what the Curry-Howard correspondence becomes when it is adapted to imperative programs.24

To summarize the main points, algorithms are machine executable because their execution depends only on syntax with no need for a human to interpret their meaning. But they solve problems because the symbols have meanings.

5. All computations carried out by a stored program computer are mathematical computations carried out according to a universal mathematical algorithm.

Mathematicians have discovered that some algorithms have a universal property. They can compute all possible computable functions provided they are given some corresponding input called a program. Universal algorithms makes possible to build general purpose computers. When we have a machine able to compute a universal algorithm we can make it compute any function of our choosing by supplying it with the corresponding data. This is the difference between making a machine dedicated to carrying out a single algorithm and software.

When a general purpose computer built in this manner every program ends up being executed by the universal algorithm. Therefore every computation in is a mathematical computation according to a mathematical algorithm. This phenomenon is often referred to by the slogan "software is mathematics". This notion is often repeated on Groklaw.

Several universal algorithms are known. Here is a selection among the main ones.

There is SLD resolution which is used in the logic programming paradigm and languages such as

Prolog. SLD resolution is a universal algorithm which applies rules of logic to the data.25

In

functional programming

various implementations of

normal order β-reduction

are used.26 These

universal algorithms are used in languages derived from

λ-calculus

like

LISP.

Instruction cycles are the preferred universal algorithms for imperative programming which is the most widely used programming paradigm. Instruction cycles have both hardware and software implementations. A hardware implementation results into the

stored program computer architecture which is the dominant way of making general purpose programmable computers. Software implementations often take the form of virtual machines or bytecode interpreters.

The universal Turing machine plays an important role in the theoretical foundations of computer science. It played a role in the birth of computation theory. It has also been inspiration behind the invention of the stored program computer. Unlike the previously mentioned universal algorithm it is not used for actual computer programming.

When a universal algorithm is implemented in software the computer needs to be programmed twice. The first program uses the native instructions of the computer to implement the universal

algorithm in software. The second program is the data given to the software universal algorithm.

The instruction cycle works as follows, assuming a hardware implementation in a stored program computer.27

-

The CPU reads an instruction from main memory.

-

The CPU decodes the bits of the instruction.

-

The CPU executes the operation corresponding to the bits of the instruction.

-

If required, the CPU writes the result of the instruction in main memory.

-

The CPU finds out the location in main memory where the next instruction is located.

-

The CPU goes back to step 1 for the next iteration of the cycle.

As you can see, the instruction cycle executes the instructions one after another in a sequential manner. In substance the instruction cycle is a recipe to "read the instructions and do as they say". This instruction themselves don't execute anything. They are data read and acted upon by the CPU.28

Not all universal algorithms use instructions as their input as the instruction cycle does. It is incorrect to assume every computer program is made of instructions because some programming languages target universal algorithms that don't use instructions as their input.

B. Some Errors of Facts Found in Arguments About Software and Patents

This section enumerates a few errors of facts that poison the discussion about software and patents. It is subdivided in several subsections, each of them is dedicated to one error. We explain what the error is and how it poisons legal arguments about abstract ideas and their applications. Each subsection is a standalone argument. Each of the errors is damaging and must be corrected.

The overarching argument for this section B is that these errors have a cumulative effect. Collectively they solidify the erroneous notion that the functions of software are performed solely through the physical properties of electrical circuits. Each error either disregard or deny the role of symbols and their meaning in computer programming. Then, the cumulative effect is that the expression of an abstract idea is conflated with the application of an idea. As a result, oftentimes patents on the expressions of abstract ideas are granted because they have been mistaken for the applications of these ideas.

1. The proper understanding of the term "mathematical algorithm" is the one given by mathematicians.

Historically the courts have had problem understanding the term "mathematical algorithm". For example the Federal Circuit stated in

AT&T Corporation vs Excel Communications:

Courts have used the terms "mathematical algorithm," "mathematical formula," and "mathematical equation," to describe types of nonstatutory mathematical subject matter without explaining whether the terms are interchangeable or different. Even assuming the words connote the same concept, there is considerable question as to exactly what the concept encompasses.

Also see in re Warmerdam:

The difficulty is that there is no clear agreement as to what is a "mathematical algorithm", which makes rather dicey the determination of whether the claim as a whole is no more than that.

Part of the problem lies in the definitions they have used. Courts are not referring to the work of mathematicians when they try (and so far fail) to understand the meaning of this term.

In Gottschalk v. Benson the Supreme Court describes the term algorithm like this:

A procedure for solving a given type of mathematical problem is known as an "algorithm."

This is not an altogether wrong one-sentence summary, but it is too concise to be a complete definition. The details of the mathematically correct notion cannot be known if this sentence alone is used as the sole source of information.

In Typhoon Touch Technologies, Inc. v. Dell, Inc. the Federal Circuit explained their understanding:

The usage "algorithm" in computer systems has broad meaning, for it encompasses "in essence a series of instructions for the computer to follow," In re Waldbaum, 59 CCPA 940, 457 F.2d 997, 998 (1972), whether in mathematical formula, or a word description of the procedure to be implemented by a suitably programmed computer. The definition in Webster's New Collegiate Dictionary (1976) is quoted in In re Freeman, 573 F.2d 1237, 1245 (CCPA 1978): "a step-by-step procedure for solving a problem or accomplishing some end." In Freeman the court referred to "the term `algorithm' as a term of art in its broad sense, i.e., to identify a step-by-step procedure for accomplishing a given result." The court observed that "[t]he preferred definition of `algorithm' in the computer art is: `A fixed step-by-step procedure for accomplishing a given result; usually a simplified procedure for solving a complex problem, also a full statement of a finite number of steps.' C. Sippl & C. Sippl, Computer Dictionary and Handbook (1972)." Id. at 1246.

In particular the court in In re Freeman decided that these definitions of this term are more or less synonymous with process. Consequently the courts have tried to narrow down the understanding of mathematical algorithm to a subcategory of algorithms that the courts would deem "mathematical".

Because every process may be characterized as "a step-by-step procedure * * * for accomplishing some end," a refusal to recognize that Benson was concerned only with mathematical algorithms leads to the absurd view that the Court was reading the word "process" out of the statute.

This is where the problem occurs. The definitions the courts have used provide no insight into what makes an algorithm mathematical. As a result, the courts don't have the information they need to distinguish a mathematical algorithm from a process in the patent-law sense.

Mathematicians have told us what a mathematical algorithm is. The courts should use this definition. Then they would know what an algorithm is in the mathematical sense of the term.

A mathematical algorithm is a procedure for manipulating symbols which meet the additional requirements we have given above.29 We can ensure an algorithm is "mathematical" by verifying it meets the requirements of mathematics.

It happens that the computations carried out by a computer always meet these requirements. The slogan "software is mathematics" is often used to refer to this conclusion. We may reach that conclusion in several ways. For the purposes of this response, it suffices to mention three of them.

First, we may just compare the manipulation of bits in a computer with the requirements of mathematics to see that there is a match. An algorithm is a procedure that solves problem through the mechanical execution of a manipulation of symbols. A programmer must find a way to solve the problem exclusively by syntactic means, without having the machine to refer to the semantic. This obligation is what ensures the algorithm always meet the requirements of mathematicians for an algorithm to be a mathematical algorithm.

The second way to reach this conclusion is to observe that software is always data given as input to a universal algorithm. Given that the universal algorithm is mathematical then the computation must be the execution of a mathematical algorithm

A third way to reach a similar conclusion is to use a programming language approach. We may ask whether the claimed method is implementable in the Concurrent ML extension of the programming language Standard ML. The official definition of the language specifies in mathematical terms which algorithm must be executed when a program is executed. Concurrent ML extends this specification to input/output routines and various concurrent programming constructs. A program written in this language is guaranteed to correspond to a mathematical algorithm given by the definition of the language.30

The patent eligibility of a computer implemented invention hinges on whether the claim is directed at an application of the mathematical algorithm as opposed to the algorithm itself. A logical conclusion of "software is mathematics" in the sense above is that any threshold test of whether a mathematical algorithm is present in the invention is always passed when software is used. Attempts to distinguish computer algorithms that are 'mathematical' from those which are not are contrary to the principles of computer science. Then the section 101 analysis must proceed to whether the claim is directed to a patent-eligible application of the algorithm as opposed to the patent-ineligible abstract idea. A proposal for doing this will be presented in section C below.

2. The vast majority of algorithms can carried out in practice for smaller inputs and are impractical for larger inputs.

Mathematicians know that algorithms must be executed in practice in order to actually solve problems. A procedure which can't be actually carried out won't solve anything. But still they have made a conscious decision to ignore the practical limitations of the computing agent in their criteria for accepting a procedure as an algorithm.31 This is in direct conflict with a frequently stated legal argument. Some people argue that whether or not the computation can be implemented in practice is one of the distinguishing factors between abstract mathematics and an application of mathematics.32 In one version of this argument it is argued that if the algorithm is hard to implement in practice then this is evidence that it is not an abstract idea.

The problem with this argument is that the burden of carrying out the steps on an algorithm increases with the quantity of data present in the input. There are few exceptions, but typical algorithms require at least to read their inputs. Then logically, if the size of the inputs increases, more work is required to read them. Then the input must be processed and again the amount of work increases with the quantity of data to be processed. All typical algorithms are practical to use for a small enough size of inputs. And all typical algorithms are impractical when the size of the data grows over a certain limit. Therefore all typical algorithms will both pass and fail the "can be implemented in practice" test depending on the size of the input.

In particular all ordinary arithmetic calculations may be either practical or impractical depending on how many decimals are required to write the numbers. Doubling the number pi with a precision of one trillion decimals is not something a human doing pencil and paper calculations can achieve in his lifetime. A computer can do it but there is no limit to infinity. If we increase the number of decimals to a high enough value the calculation is impractical on the fastest computers. This statement will remain true no matter how powerful our computer may be because their capacity will always be finite.

This is the point of the abstraction mathematicians have made. By ignoring the practical limitations they separate the mathematical properties of the algorithm from the capabilities of the computing agent. A test of whether the method may be carried out in practice is not testing for what a mathematical algorithm actually is.

We may compare algorithms with legal arguments. Courts enforce limits on the number of pages a brief may have and on the duration of oral arguments. Lawyers must find concise arguments that fall within these limits. This is not inventing a smaller stack of paper covered with ink or a faster process for emitting sounds in a courtroom. The argument remains an abstract idea even though concision makes it fit within physical limits.

Computations are mathematical written utterances because they are procedures for writing symbols. When a better algorithm is found the utterances are more concise. This is still a mathematical algorithm. The comparison with a legal argument is applicable because according to the Curry-Howard correspondence an algorithm is an expression of logic.33 An algorithm solves problems precisely because it uses sound principles of logic and arithmetic to derive the solution.

3. The printed matter doctrine should be applicable to computations.

What happens when the only new and nonobvious advances over the prior art are in the meaning of the symbols? For books and printing processes there is the printed matter doctrine. It is well understood that stories of hobbits traveling in faraway countries are not physical elements of books or printing processes. The contents of the book cannot be used to distinguish the invention over the prior art.

As far as Groklaw can tell there is no analogous doctrine about computations carried out by computers. This is a problem. There should be one.

Please take a pocket calculator. Now use it to compute 12+26. The result should be 38. Now give some non mathematical meanings to the numbers, say they are counts of apples. Use the calculator to compute 12 apples + 26 apples. The result should be 38 apples. Doe you see a difference in the calculator circuit? Here is the riddle. What kind of non mathematical meanings must be given to the numbers to make a patent eligible difference in the calculator circuit? Answer: it can't happen.

This example carries to programming. There is no difference in the computer structure between an instruction to add 12+26 and an instruction to add 12 apples + 26 apples. There is no difference in a computer structure between doing a calculation for the same of knowing the numerical answer and doing a calculation because the numbers mean something in the real world. If we use a computer to compute the trajectories of satellites in orbit the satellite and their trajectories are not computer parts. There are only instructions for manipulating numbers.

There are two issues there. First, if meaning doesn't make a difference in the computer structure then we can't argue a new specific machine is made on that basis alone. Second the difference between a pure mathematical calculation and and application of mathematics is the meaning given to numbers and other mathematical entities in the real world.

When the innovation lies strictly in the meaning with no physical difference in the machine then there is no patentable invention. At the very least the invention cannot be a machine or the operating process of a machine. We need a doctrine analogous to the printed matter doctrine to recognize these situations.

Here is another way to make the same point. Consider this claim.

A [computer / printing press] comprising a printing device and a microprocessor configured to use the printing device to print the story of hobbits traveling in faraway countries to destroy an evil anvil.

The words in brackets indicate a choice. This claim may be written indifferently to a computer or a printing press. A computer may be connected to a printed. A printing press may have an embedded microprocessor to control it. Logically the printed matter doctrine should apply in both scenarios. Even if we assume that destroying an anvil instead of a ring is a new and nonobvious improvement over Tolkien's The Lord of the Ring both claims should still be invalid.

We have an example of a claim where the printed matter doctrine should be applicable to a programmed computer. This raises the issue of where and how do we draw the line between claims where this doctrine applies and where it doesn't.

Technology does not provide a way to draw this line. For example we cannot distinguish between instructions and data. It is always possible to program a printing process with instructions which in effect mean "print the letter T, print the letter h, print the letter e, etc" until the whole book is printed. Or alternatively we can use a universal algorithm which does not rely on instructions as its input to transform any algorithm into non executable data. If the test relies on a technological difference then no matter how the courts may wish to define this test programmers will be able to arrange matters in such manner that their program falls on the side of the line they want. But if the test doesn't rely on technology, it is hard to see how can it be argued that a machine or its operating process has been patented.

We can make the argument for a computing version of the printed matter doctrine in a third manner. Let's compare the printing process with computing. Printing is a physical process which is performed through the physical properties of ink and paper. This process functions automatically without the intervention of a human mind. The specific book being printed is determined by data given as input to the printing press.

In a stored program computer, the computation is carried out by executing the instruction cycle. This instruction cycle is a physical process which is performed through the physical properties of an electrical circuit. This process functions automatically without the intervention of a human mind. The specific calculation is determined by data given as input to the instruction cycle.

There is no factual difference which would justify applying the printed matter doctrine to a printing process but not to the instruction cycle. The relationship between the meaning of symbols and the machine is exactly the same in both cases.

The Federal Circuit guidance given in in re Lowry is unhelpful:

The printed matter cases "dealt with claims defining as the invention certain novel arrangements of printed lines or characters, useful and intelligible only to the human mind." In re Bernhart, 417 F.2d 1395, 1399, 163 USPQ 611, 615 (CCPA 1969). The printed matter cases have no factual relevance where "the invention as defined by the claims requires that the information be processed not by the mind but by a machine, the computer." Id. (emphasis in original).

It is hard to see how this guidance can work.

A claim on a

configured printing press

requires that the information be processed not by a human mind but by a machine, the printing press. The issue of meaning arise whether or not the human mind is an element of the process.

Software is much more likely to require the use of a human mind than a printing press. Many computer programs are interacting with their users, providing outputs and requiring inputs. In these circumstances part of the data processing is done by a human mind

because human decisions are involved.

On the other hand a printing press replicates the printed matter in an entirely automatic manner.

This is not the only flaw in

Lowry.

There isn't information useful and intelligible only to a human mind anymore. Authors write books using word processors. Books exist in electronic form and printing devices are controlled by computers. Printed

characters are machine readable through

optical character recognition (OCR)

technology. The

Perl programming language

has been designed for the express purpose of processing text and it can process the result of an OCR scan.

The preceding paragraph assumes that "intelligible to a machine" means the ability to recognize the characters and process their syntax. If "intelligible" means the faculty to relate the syntax with meaning computers don't do that.34 This is precisely why programmers must use algorithms to solve problems.35

The Lowry test for the applicability of the printed matter doctrine is arbitrary and illogical. It is not consistent with technological reality.

4. The language used in the disclosure and claims in software patents rely on an inversion of the normal semantical relationships between symbols and their meaning.

The error discussed in this section occurs when this inversion of the normal semantical relationship is not acknowledged. This leads to a contradictory reading of Supreme Court precedents on section 101 subject matter patentability. But if the inversion is taken into account it is easy to see that Supreme Court precedents are consistent.

Let's use ink and paper as an analogy. The marks of ink represent letters and the letters form words which have meanings. So the normal semantical relationship goes from the physical substrate, the ink, to the symbol, the letter. And then it goes from the letters to the words, from the words to the sentences and ultimately to the meaning of the sentences.

This observation is applicable to the mathematical foundations of computing. Mathematics is a written language. The semantical relationships follow the normal progression, from ink to the symbols, from the symbols to the syntax of mathematical language and then to the mathematical entities like numbers which are denoted by the symbols. Then there is an additional relationship between math and whatever in the universe is described by means of math.

This applies to algorithms too. An algorithm is a mathematical procedure to solve problems, like the ordinary pencil and paper arithmetical calculations we have learned in school. When computing the computer write symbols that have the same semantical relationships. An algorithm is a procedure for writing and rewriting the symbols until we arrive at a solution of the problem.

We find in computers the same semantical progression except that the symbols are not written on paper. Computers use electrical, magnetic and optical phenomenons to represent bits. The bits are symbols representing boolean values and numbers. Finally the numbers means whatever in the universe is described by the numbers. The computations are manipulations of the bits according to some algorithm. This is part of mathematical foundations of computer science.

Sometimes we may invert this semantical relationship. For example we may enter a bookstore and ask for the book where hobbits travel in faraway countries where live elves and orcs to destroy an evil ring. Then the store keeper will bring a copy of Tolkien's Lord of the Ring. This is describing the physical book by referring to its contents. Instead of using the book to tell the novel we use (an outline of) the novel to refer to the book.

This inversion of the semantical relationship occurs in software patents. The functions of the software are disclosed and claimed. This language is written in terms of the meaning of the data. If the patent is on a payroll system the functions of the software will be described in terms of employees, wages and deductions for example. The expectation is that a skilled programmer will be able to write the software from this disclosure. Also, the claim is presumed to describe a new machine which is a programmed computer. The claimed invention is either this machine or some machine process such as transistors turning on and off while carrying out the functions of the software. This is describing the physical device in terms of its meaning, exactly like the physical book may be described by an outline of the novel.